Industrial revolutions in the past have been driven mainly by groundbreaking technologies that redefined both the paradigms of producing goods and services and approaches to management processes (Kaur & Gandolfi, 2023). As production becomes more advanced and as digitization and information and communication technologies come into play, contemporary management processes are progressing toward a higher level of automation (Jafari et al., 2022; Luo et al., 2021). Until recently, researchers were interested mainly in problems related to the implementation of IT tools designed to support the HR function (Obeidat, 2016; Wirtky et al., 2016). Today, however, as algorithmic technologies are put to work, personnel (HR) processes are changing and evolving, and they are increasingly based on data analysis and data processing (Berhil et al., 2020). The use of artificial intelligence (AI) technologies in HR management processes has become the main trend that businesses consider and researchers investigate (Jiaping, 2022). However, despite the multitude of approaches to the use of digital technologies in HR management in business contexts (Glikson & Woolley, 2020; Luo et al., 2021; Tambe et al., 2019), there are few, if any, references to the psychological aspects of employees working in environments that expose them to technologies based on artificial intelligence.

Given the extraordinary pace of the evolution of AI tools, it is necessary not only to know the basic capabilities and available use cases but also to anticipate and adapt to the changes that technological progress brings to human resources management processes. In particular, it is necessary to understand the potential impact of artificial intelligence technologies on the behavior of HR employees; this remains a significant research gap. Although research is underway into the ethical aspects of using artificial intelligence (Haenlein et al., 2022; Jagger & Siala, 2015; Lambrecht & Tucker, 2019; Vemuri, 2014), other aspects of using AI in the HR sphere are only now being tested.

This paper aims to bridge this gap, to identify and present both the opportunities and the main psychological risks, or dangers, that employees will face when exposed to systems based on artificial intelligence. This knowledge is particularly important for HR department managers, as these people will have to deal with the burden of resolving problems that modern technologies will bring. In order to achieve that aim, Polish and international literature sources have been studied using the systematic literature review (SLR) method (Czakon, 2011; Czakon et al., 2015) to identify, assess, and interpret the existing relevant research.

During Industry 1.0 (circa 1750–1870), the mechanization of production began, leading to the emergence and development of new business sectors. The use of formal methods, procedures, and organizational tools was marginal (Aggerholm et al., 2011). The role of personnel departments was merely one of allocating work and ensuring that the work was done efficiently. The staff members in this role were responsible for providing a physically safe factory environment and for remunerating workers (Listwan, 2002). Therefore, that period is referred to as HR 1.0, although precursors to contemporary HR departments can hardly be found there.

It was not until Industry 2.0 (1870–1960) that work processes became significantly standardized, and efforts focused on finding ways to increase the degree of management control and improve the efficiency of work (Stuss, 2023). At the start of the 1890s, many business owners began to provide their personnel with various workplace facilities and benefits for their families (such as cafeterias, medical care, recreational activities, libraries, magazines, and residential premises). A new position was often created for this role. Known as welfare secretary, it was offered mainly to women or social workers (Bacon, 2007). This was much influenced by, inter alia, Elton Mayo, who supported his ideas with the results of a research project conducted in the 1920s known as the Hawthorne studies. It was argued that productivity was directly linked with job satisfaction and that people would be highly productive if they were cared for by someone they respected. At that time, personnel managers focused on humanism (relying on the research work of Likert and McGregor). They claimed that human factors played a key role in studying organizational behavior and that people should be responsible and progress-oriented (Armstrong, 2002). In summary, the development of the personnel function at that time was based on the belief that personnel and their involvement in decision-making processes deserved more recognition and that a more participatory management style should be adopted. Many managers thought that this initiative forced them to integrate socioeconomic divisions, and it was unfortunately rejected. In such cases, management processes (known as HR 2.0 today) and work processes became more standardized.
Nonetheless, a few organizations put the theory developed in this emerging field of research into business practice, recognizing its potential to increase work efficiency, and with it business efficiency, by moving towards more participatory management practices (Makieła et al., 2021).

The years between 1960 and 2000 brought another technological change known as Industry 3.0. At that time, HR managers treated employees as a business resource. This concept is said to have been introduced by E.R. Miles (Miles & Rosenberg, 1982). HR management was focused on moving towards advisory and strategic areas by implementing highly advanced programs (Pocztowski, 2007). HR departments were presented with a unique opportunity to redefine their role within organizations by morphing from recruitment, training, and administrative services providers to strategic partners for managers to support their organizations’ business operations. It seems obvious and natural that HR departments should make use of the knowledge of their organizations that they undoubtedly have to identify the potential needs of their organizations as a whole (Ulrich & Grochowski, 2018).

At the end of the 1990s, Ulrich proposed a new approach to the work of HR departments with a new role, namely the HR Business Partner. According to this approach, the HR Business Partner is a strategic partner, an administrative expert, an employee champion, and a change agent (Ulrich & Beatty, 2001). Consequently, the main role of HR departments (HR 3.0) was to act as a leader integrating the tasks of all departments within the organization, an employee advocate, a human capital developer, a functional expert, and a strategic partner (Ulrich et al., 2010). On the one hand, these roles configure and develop proper relations among all the people within an organization. On the other, they deliver the organization’s key strategic objectives. An analysis of that period shows that initially, measures were taken to reduce employee numbers by cutting wages, implementing career development programs, resolving employment disputes through arbitration, transferring intellectual capital and profits to take advantage of better tax rates, closing pension schemes, hiring people on part-time contracts, and reducing or canceling employee benefits. This tendency resulted from the 2007–2009 global economic crisis (Makieła et al., 2021).

After 2010, another evolutionary change in the HR function was to open HR departments to the outside world, combined with talent management (Stuss, 2021). Global industry saw the arrival of a new era, Industry 4.0. The change resulted from a global lack of workforce commitment, with people opting for independent work and being their own bosses rather than employment. There was a shortage of candidates ready to take on employment in most positions, and large amounts of money were spent on recruiting and hiring people in roles vital for organizations (Stańczyk & Stuss, 2021). These measures are still taken today, which is why HR specialists need to assess needs and develop new approaches to the HR function on an ongoing basis while taking into account the huge impact that technology has on their work (Mantzaris & Myloni, 2023).

In the fourth industrial revolution, Industry 4.0, challenges include not only a search for ways to motivate employees, mostly those referred to as talent (Ganiyu et al., 2021), but also the belief that robots will replace not only human muscles but human brains as well. It is not only technically possible but also increasingly profitable (Mantzaris & Myloni, 2023). In summary, HR departments should use new methods and approaches based on artificial intelligence and assign employees to meaningful roles in accordance with HR 4.0.

This paper aims to bridge this gap by identifying and presenting the opportunities and the main psychological risks or dangers employees will face when exposed to systems based on artificial intelligence. In our research, we used formal approaches and systematic methods to locate, select, evaluate, summarize, and report the references collected (Denyer & Tranfield, 2009; Rowley & Slack, 2004) on ethical aspects of AI in HR departments. Exploring research gaps through a systematic literature review provides important support in identifying the challenges facing future research (Amui et al., 2017; Mariano et al., 2015). The systematic review covered two groups of references: the first on the HR function, the HR department, and the evolution of HR processes, and the second on AI and the ethics of AI. It made it possible for us to analyze the existing body of work and then prepare for future empirical research by gathering the relevant knowledge resources available on this subject, as well as by varying research directions (Denyer & Tranfield, 2006; Kitchenham, 2004).

Search terms were checked in the Scopus database due to its significance as a subscription-based online service for indexing scientific citations. Only scientific articles, monographs, chapters from monographs, and review articles were retained. Data extraction methods were used for the systematic review to minimize possible errors and biases in this study, which required documentation of all diagnostic stages. Data extraction included general information such as title, author or authors, and publication details (Jabbour, 2013); see Table 1.

Table 1. Results of the search process and articles remaining after analysis

| According to the Scopus database | HR | AI | HR + AI |
|---|---|---|---|
| Subject areas including Business, Management and Accounting, Economics, and Social Sciences. All documents | 20 336 | 64 850 | 367 |
| Articles | 6 469 | 34 108 | 164 |
| In English | 4 996 | 31 507 | 161 |
| After 2020 | 4 772 | 21 610 | 140 |
| Content verified based on abstracts | | | 61 |

Source: own elaboration.
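The narrowing of the search results can be made explicit with a few lines of arithmetic. The sketch below is illustrative only: it copies the counts for the combined HR + AI query from the table above and assumes that the final figure of 61 abstract-verified items belongs to that combined query. The function name `retention` is our own label for this illustration.

```python
# Counts for the combined "HR + AI" query, copied from the table above.
# Assumption: the 61 abstract-verified items refer to this combined query.
funnel = [
    ("All documents in the selected subject areas", 367),
    ("Articles", 164),
    ("In English", 161),
    ("After 2020", 140),
    ("Content verified based on abstracts", 61),
]


def retention(stages):
    """Return (label, count, share of previous stage, share of first stage)."""
    first = stages[0][1]
    previous = first
    rows = []
    for label, count in stages:
        rows.append((label, count, count / previous, count / first))
        previous = count
    return rows


for label, count, step_share, total_share in retention(funnel):
    print(f"{label:<45} {count:>4}  "
          f"{step_share:6.1%} of previous  {total_share:6.1%} of initial")
```

Read this way, the language filter removes very few records, while the document-type filter and the abstract-based verification account for most of the reduction.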

The adopted research process allowed the identification and analysis of the references obtained based on the coding system, leading to the identification of research gaps in the literature. All the results of this search were then narrowed down by applying refinement mechanisms by field to business, management, accounting, and social sciences. Furthermore, the search was narrowed down to texts in English since it was the dominant language in all literature items.

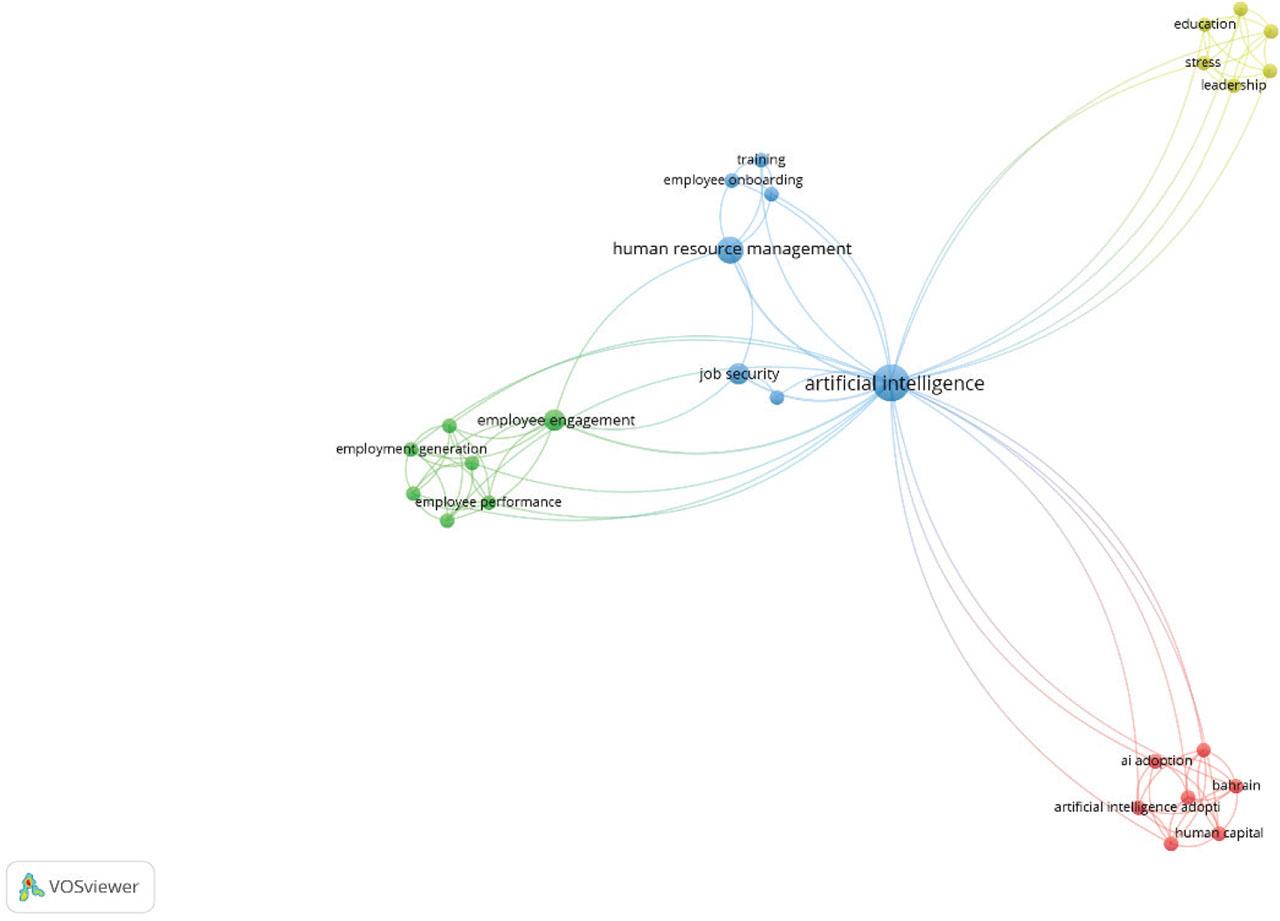

Next, the articles were analyzed using the VOSviewer software (version 1.6.20), a tool for constructing and visualizing bibliometric networks. These networks were used to construct and visualize the co-occurrence of important terms in the literature. The terms from the abstracts that appeared most frequently in the networks were used to develop analysis categories, as presented in Figure 1.

Figure 1. Keyword mapping for the concept

Source: Own elaboration based on a systematic review of the literature.
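The idea of term co-occurrence behind such a map can be shown with a minimal sketch. It is not a reproduction of the VOSviewer procedure: the keyword lists are hypothetical toy data, not the reviewed abstracts, and the function `cooccurrence` is our own illustration. It counts, for each pair of terms, the number of abstracts in which both appear.

```python
from collections import Counter
from itertools import combinations

# Hypothetical keyword lists, one per abstract (toy data, not the study's corpus).
abstracts = [
    ["artificial intelligence", "recruitment", "bias"],
    ["artificial intelligence", "technostress", "employees"],
    ["recruitment", "bias", "artificial intelligence"],
]


def cooccurrence(keyword_lists):
    """Count the documents in which each unordered pair of terms co-occurs."""
    pairs = Counter()
    for keywords in keyword_lists:
        # Each term counts once per document; sorting gives a stable pair order.
        unique_terms = sorted(set(keywords))
        for a, b in combinations(unique_terms, 2):
            pairs[(a, b)] += 1
    return pairs


links = cooccurrence(abstracts)
for (a, b), weight in links.most_common(3):
    print(f"{a} -- {b}: {weight}")
```

Pairs with higher counts correspond to stronger links between terms, and groups of strongly linked terms are the kind of cluster from which analysis categories can be derived.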

The final step was an analysis of the content of the selected papers. In the analysis process, interrelated information was arranged into parts and encoded (Kepplinger, 1989), so that all the logically possible interrelations between these objects and statements about other factors could be identified later (Elo et al., 2014). This methodology allowed the researchers to gather source materials of significance for further analyses.

The term extension transference was coined in the second half of the 20th century by Edward Hall, an American anthropologist who studied human behavior in cultural contexts. In one of his best-selling monographs, Beyond Culture, Hall developed the idea of extension transference, which sees technological items as extensions of the human organism: a vehicle as an extension of a person’s legs, a telephone as an extension of a person’s voice, or a pair of binoculars as an extension of a person’s eye. The role of these extensions is to help people adapt to the environment in which they live. According to Hall, technological items or inventions, some of which are such extensions, helped humans outsmart nature by skipping over a long process of evolutionary adaptation to the environment, making it possible for them to live under unfavorable conditions. Artificial intelligence is another such invention, an extension that may completely change people’s lives and affect every area of them (Hall, 1976).

Artificial intelligence (AI) is not a new idea. It emerged as long ago as 1956, and as a research direction, it aims to conceptualize and present intelligent behavior as a computational process. Artificial intelligence is commonly understood as a branch of research into information technologies and computer science, focusing on the design of smart systems (Gînguță et al., 2023) – systems that have a huge impact on large parts of societies and economies (Saghiri et al., 2022).

In terms of its practicality, artificial intelligence has become one of the most attractive and groundbreaking technologies in recent years (Yigitcanlar et al., 2020). It is a widely discussed research topic across engineering, science, education, medicine, and economics. It needs to be noted that AI is described as computer intelligence, a simulation of the human intellect, or a mental machine (Sadok et al., 2022).

Scientific literature offers a variety of definitions of artificial intelligence. AI is defined as a knowledge-based system that analyses its surroundings, interprets perceptions, solves problems, and determines solutions to accomplish the specific tasks for which it was designed (Gînguță et al., 2023). Artificial intelligence is being developed primarily for speech recognition, machine learning, planning, and problem-solving (Joiner, 2018). Artificial intelligence may, therefore, be seen as a new way of programming computers to teach them to think the way that humans do (Fenwick & Molnar, 2022; Sarker, 2022). On the other hand, artificial intelligence is characterized as the study of computations that allow for perception, reasoning, and action (Joiner, 2018). For this reason, Russell and Norvig describe different approaches to artificial intelligence. They involve the simulation of human thinking, rational thinking, human action, and rational action through the use of a programmable machine (Sabharwal & Selman, 2011). Artificial intelligence has gained importance in recent decades as technology has developed to such an extent that applications are already available that make decisions independently, in a way similar to human intelligence, and are programmed to do so without human intervention (Kaur & Gandolfi, 2023).

It follows that artificial intelligence, when seen as an extension (according to Hall), will be an extension of the human brain. It is not the first extension of this most complex human organ, which has itself not yet been fully explored. It is, however, undoubtedly one of those extensions that may revolutionize people’s lives on a grand scale, the way the computer has. Artificial intelligence is seen as the cornerstone of work across various industries, as the AI implementation process has accelerated in recent years (Mathis, 2018). It is used in the IT sphere to design machines that perform tasks that would require intelligence if they were done by humans (Fjelland, 2020). In the business world, AI-based systems with access to large amounts of data can be used in management, accounting, finance, HR, sales, and marketing, potentially increasing revenue and reducing business costs. Artificial intelligence and the continuous learning ability of AI-based software increase innovation, improve process optimization, and enhance HR management and quality (Cioffi et al., 2020).

AI-based technologies are slowly creeping into the HR management sphere as well. Eightfold, a company that implements AI solutions at organizations, conducted a survey in 2022 among 259 managers in at least senior positions at HR departments of US companies. The survey shows that most of these companies already benefit from artificial intelligence. AI-based systems are used in personnel management (77%–78%), recruitment and hiring (73%), performance management (72%), and onboarding new employees (69%). Asked about the use of AI in the future, 92% of HR leaders intend to increase the use of artificial intelligence in at least one HR area. The top five areas are performance management (43%), payroll processing and benefits administration (42%), recruitment and hiring (41%), onboarding new employees (40%), and employee records management (39%). Most managers plan to increase AI use within 12 to 18 months.

However, it must be noted that AI-based technologies are not widely used by organizations worldwide, including in HR management. These results should, therefore, be seen as evidence of a growing trend rather than a picture of the global reality. All the same, artificial intelligence offers a variety of benefits, and these benefits are a great opportunity for organizations and their employees to improve their performance. However, the use of AI will bring new challenges, which are discussed below.

AI-based technologies offer a variety of opportunities for improvement and cost-cutting in HR management (Renkema et al., 2016) by combining HR function elements with cognitive technologies such as mobile devices, robotics, and the Internet (Kaur & Gandolfi, 2023). They allow organizations to take advantage of their competitive edge by using human capital better (Baker, 2010). In particular, artificial intelligence can redesign the HR management framework of an organization into an integrated, high-performing system that improves personnel planning and personnel analytics, offers virtual assistants for self-service or the provision of HR services, supports the development of career paths, leadership, and coaching (Mantzaris & Myloni, 2023), and helps design and develop employee welfare measures. Artificial intelligence uses algorithms to build accurate and reliable information databases (IDBs), allowing for instant access to and fast transfers of information in the IDBs, increasing human resources’ potential (Bujold et al., 2023). This, in turn, allows AI to analyze data faster and more extensively than humans can, with outstanding accuracy, establishing itself as a reliable tool (Chen, 2023). AI-based systems can gather and assess large amounts of data beyond the analytical capability of the human brain, providing users with recommendations in decision-making processes (Shaw et al., 2019).

An important area noticeably influenced by artificial intelligence is recruitment. In this area, AI-based solutions may provide an organization that uses them with a competitive edge and help it attract talent better than its competitors do, which may make its business more competitive (Köchling et al., 2023). Artificial intelligence can also be used to promote self-study in the workplace through database management (Wilkens, 2020). This gives the manager access to all records, which he or she can use when necessary. AI can also support decision-makers through the use of big data and machine learning algorithms (Wamba-Taguimdje et al., 2020). In addition, artificial intelligence offers an inclusive approach to training, personnel development, and work performance assessment. AI-based systems can make the decision-making process intuitive (Esteva & Topol, 2019), prepare HR calendars of activities, and assess the productivity of such decisions and such tools (Kiran et al., 2022). Artificial intelligence can be used in HR to track and assess work performance, measure productivity, provide real-time feedback, and identify areas for improvement, including work conditions (Czarnitzki, Fernández, & Rammer, 2023; Ryden & El Sawy, 2022).

Organizations, in particular, can enjoy the benefits of AI. Cutting-edge technologies based on AI will allow employees to use their potential better, as they will be better matched to tasks and positions. Artificial intelligence will also "make sure" that working conditions and the safety of employees are optimized (Min et al., 2019; Palazon et al., 2013), improving employees’ physical and psychological well-being. Some systems can be used for real-time monitoring of the emotional states of employees, including the stress that they experience (Lu et al., 2012; Rachuri et al., 2010), which will allow employers to respond quickly and to prevent negative consequences. Bearing in mind the mental health of employees, which translates into work performance and productivity, chatbots were introduced to be tested as a form of support for employees dealing with difficult and stressful situations (Cameron et al., 2017; Oracle & Workplace Intelligence, 2020). Some authors predict that such systems will be ready for use by 2025 (Brassey et al., 2021).

However, it must be remembered that the benefits of using artificial intelligence to improve the design and organization of work processes for optimized efficiency and personnel performance may come with certain risks or dangers, and that AI may be used to the detriment of employees.

The American Psychological Association (APA) defines technostress as a form of occupational stress associated with information and communication technologies such as the Internet, mobile devices, and social media (APA, 2018). Technostress as a concept originally emerged in the 1980s, when offices began to be supplied with equipment such as computers, printers, and photocopiers. This term was introduced and described by Craig Brod, an American psychotherapist, in his book The Human Cost of the Computer Revolution (1985). The past decade has seen the number of published works on technostress grow rapidly (Bondanini et al., 2020), including in the areas of business and management (Salazar-Concha, 2021). Some authors point out the negative consequences of experiencing technostress, such as lower job satisfaction, lower organizational commitment (Ragu-Nathan et al., 2008), stress, frustration, and health issues (Weil & Rosen, 1997; Ayyagari, 2011; Riedl, 2012).

One of the most frequently cited technostress concepts is the one proposed by Tarafdar et al. (2007). According to this concept, technostress can be split into five components:

Techno-overload, which describes situations where the use of computers forces people to work more and work faster;

Techno-invasion, which describes situations where people cannot cut off work and find it difficult to draw a line between time off work and time at work;

Techno-complexity, which describes situations where employees feel they lack skills, knowledge, and understanding in relation to new technologies and, therefore, believe that they are unable to cope with them;

Techno-insecurity, which is associated with situations where people feel threatened about losing their jobs to machines or other people who have a better understanding of the technologies in use;

Techno-uncertainty, which is associated with situations where people feel they must adapt to new technologies continuously due to the ever-changing nature of their work environments and the fact that work processes are increasingly automated.

The above stressors may also apply to AI-based technologies, although some of them may have to be redefined. One example is techno-invasion, which in this case should be understood more literally. Until recently, with the use of communication technologies (mobile phones or the Internet), techno-invasion was associated with situations where employees are required to stay in touch with their organization at all times, even after work, in a way that is detrimental to what authors refer to as work-life balance (Bencsik & Juhasz, 2023). By monitoring employees’ work and gathering information about them, artificial intelligence interferes with the privacy of individuals even more extensively. The information collected by AI is used to improve employees’ performance, efficiency, and safety, which may benefit both employees and organizations. However, the information may also be used to the detriment of employees, who may be downgraded to jobs with lower pay or simply dismissed.

Using artificial intelligence to collect personal data and sensitive information is another matter. Modern monitoring systems are capable of recognizing people’s moods and emotions, as well as their interests, lifestyles, and beliefs (OSHA, 2022). It is argued that such systems can also be developed to identify an individual’s sexual orientation (Wang & Kosinski, 2018) or an individual’s religious beliefs (Yaden et al., 2018). When a person’s life is interfered with so extensively, the person’s mental well-being may be impaired, and the person’s fundamental right to privacy is infringed, which raises ethical issues.

Moreover, knowing that they are being monitored and that their performance is assessed continuously is a stressor for employees. It may have a negative impact on their performance and efficiency, which is paradoxically the opposite of the outcome expected by the employer when it decides to use artificial intelligence in its HR function. Psychologists are familiar with social inhibition, a phenomenon where an individual’s performance of difficult or complex tasks deteriorates in the presence of other people compared to their performance when the individual is alone (Pessin & Husband, 1933; Zajonc, 1965). We have not found similar studies involving AI, although a comparable effect seems plausible. Some studies confirm that when an employee is monitored at work and their work performance is assessed continuously, the levels of exhaustion, stress, anxiety, and fear of losing the job experienced by the employee increase (Neagu & Vieriu, 2019; Jarota, 2021).

Techno-invasion creates pressure to improve the performance and efficiency of employees, which may cause them to experience techno-overload. Systems are implemented to monitor the work performance of both blue-collar workers (warehouse operatives or transport workers) and white-collar workers. For example, the UK-based Barclays Bank has a system in place that tracks the working time that its employees spend in front of computers and measures the duration of their work breaks (Eurofound, 2020; European Parliamentary Research Service, 2020), requiring them, as it were, to put more effort into their work, which leads to work overload. This reduces the degree of an individual’s control, creating perfect conditions under which the individual will feel stressed and experience negative psychosomatic and organizational consequences.

The spread of artificial intelligence into organizations is also associated with employees feeling that they may lose their jobs and be replaced by new technologies or by employees with a better understanding of new technologies. This danger is described by Tarafdar et al. as techno-insecurity, and some other researchers consider it, in relation to AI-based technologies, as one of the biggest risks for employees (Murugesan et al., 2023). Techno-insecurity particularly affects older employees, who need more time and effort to understand new information and learn new things. This, in turn, may widen the already much-discussed generational gap, leading to more disagreement, prejudice, and conflict in the workplace (Becton, Walker & Jones-Farmer, 2014). Older generations of employees, especially those with low qualifications, who can be replaced easily by artificial intelligence, will also be exposed to techno-complexity and techno-pressure (Nimrod, 2018), which may make them feel less efficient and lower their self-esteem.

One of the few studies (Ayyash, 2023) that look into a correlation between technostress (as Tarafdar understands it) and artificial intelligence and that are based on TAM (Technology Acceptance Model; Davis, 1989) showed that a positive attitude to AI-based technologies is positively correlated with people’s perception of the technology as useful and easy to use. At the same time, such an attitude reduces techno-invasion, techno-complexity and techno-insecurity. It follows that if the level of stress experienced by employees and their aversion to new technologies are to be reduced, employees must receive appropriate training and subject-matter support in the use of AI-based systems. This challenge seems to be the next one (and perhaps the key one) that HR managers should put on their lists of things to do in the future.

The technostress linked with AI-based technologies is not the only psychological risk that employees will be exposed to in the future. Two other risks, or dangers, slightly different, will be discussed below, namely excessive trust in artificial intelligence and biased data.

The trust that one has in other people or objects (such as daily-use equipment) is founded on their reliability. A sense of trust allows one to “deactivate” control measures that otherwise take the time and energy needed to monitor, track, and analyze potential faults, defects, or shortcomings. Many appliances or machines, particularly those of complex design, are equipped with warning features that alert the user to a problem, indicate how the appliance or machine should work or that it needs repair, or simply shut it down when a failure is detected. Many AI-based systems are not equipped with such warning (or control) features, and the average user cannot quickly check whether the system is working properly. In the HR sphere, such systems include candidate selection and recruitment software, which is based on algorithms that use databases (Köchling et al., 2023).

AI-based systems do not always make the best possible choices in candidate recruitment processes. An extensive study conducted by a team led by Professor Joseph Fuller (2021) of Harvard Business School among 2,250 business leaders working for organizations in the US, the UK, and Germany showed that an AI-based software system would reject a candidate if he or she did not meet all the required criteria, even though the person may have developed the core skills needed for the vacancy to a high standard. Fuller also discovered other reasons why candidates are rejected at the first stage of the selection process, such as too much time between jobs (a break longer than six months). However, such breaks may happen for reasons beyond the candidate’s control and do not necessarily make the person unsuitable for the position. In 2018, Amazon discontinued using AI-based tools in its staff recruitment processes (BBC, 2018). Amazon made the decision when it turned out that a system based on the company’s established practices, which the company used in its recruitment process, discriminated against women. The discriminatory nature of the AI-based system resulted from the fact that Amazon’s workers were mostly men. Amazon tried to solve the problem but had no success, and the company’s management abandoned its AI-controlled recruitment practices. AI-based systems use sets of data that may be based on decisions previously made by humans. However, much of the work of cognitive and social psychologists shows that humans do not always make decisions and solve problems rationally. Moreover, AI algorithms are based on the available data and rely on mere statistics, which do not always deliver the best solutions (the Amazon case). In addition, the same decision may be right in one situation and less so in another, and artificial intelligence systems may not always have access to information about such differences.
Some researchers argue that in addition to the risk of losing a job or a particular position, biased data is one of the biggest challenges that specialists relying on artificial intelligence to make decisions about other people will face (Umasankar Murugesan et al., 2023). Eightfold’s report (2022) shows that some HR specialists are aware of this challenge: 36% of respondents said that one of the problems with artificial intelligence is the belief that it cannot replace the human decision-making process.

The risks discussed above, to which employees will be exposed in the future, are only some of the items on a long list of risk factors that come with technologies based on artificial intelligence. Other items on the list include employees being seen and treated according to data sets produced by artificial intelligence. As a result, employees will be dehumanized and will engage in risky behavior only to meet system requirements. They will also feel lonelier and more socially isolated as contact with other people decreases and as the way they spend their free time is closely monitored (OSHA, 2022).

In summary, artificial intelligence is a great opportunity for contemporary organizations, as it provides them with new solutions to the challenges they face (Bharodiya & Gonsai, 2020). However, as with any novelty, much remains unknown about artificial intelligence, which raises anxiety and concerns. Despite the debate on how artificial intelligence will affect people’s lives, we cannot predict all the implications of AI. Nevertheless, by drawing on the available research into the impact of technologies on humans and on what we already know about the psychological mechanisms of human behavior, it is possible to predict, to some extent, the risk factors that the future will bring. HR managers, in particular, should use this knowledge to minimize the dark side of artificial intelligence.

The emergence of AI-based technologies will revolutionize the work of organizations and, in many areas, will have a major impact on how work is done. The main benefits of AI-based systems are lower labor costs, higher efficiency, and better work performance at the level of individuals, business units and, consequently, entire organizations. Employees, too, will benefit from AI, as AI-based systems will take care of employees’ safety, comfort, and professional development. However, it should be noted that artificial intelligence brings many risks to which employees may be exposed. Some jobs will disappear, and others will have to be redefined, requiring people to retrain and learn new skills. Far-reaching changes will make employees feel insecure and concerned, leading to higher stress levels and related consequences. Moreover, the data gathered by AI-based systems may be used to the detriment of employees. At this point, it must be noted that the purposes for which AI-based systems will be used will depend on the intentions of those who manage them. HR departments will play a major role in the implementation and use of AI-based technologies, and much of the burden of the potential risks or dangers will fall on the shoulders of HR managers.

It is natural for humans to adapt to changes in their environments, although such changes are usually stressful when they come. Once a person understands and becomes accustomed to a new situation, they will modify it as far as possible to make it more bearable and/or better suited to themselves. The passage of time may also show that the change has brought more good than bad, and in the end it will be accepted. However, if the change threatens or diminishes the person’s resources (including economic, existential, emotional, cognitive, and other assets), the person will try to protect or recover them. Artificial intelligence may allow employees to increase their resources by saving time, learning new skills, or being more satisfied with their jobs. If AI is seen as a threat, however, employees will take action to prevent the loss of their resources and, for this purpose, may try to cheat a particular system (e.g., Lee et al., 2015).

As artificial intelligence is only now creeping into organizations and is not used as widely as Industry 4.0 solutions, little research is available into the impact of artificial intelligence on individuals. The literature review conducted here has provided an initial identification of problems concerning the relationship between AI and human resources management processes. This paper only outlines the future or potential challenges that HR managers face. It should, therefore, be taken as a starting point for further, more detailed analyses of the growing body of research into the use of artificial intelligence in the HR sphere and its impact on employees.