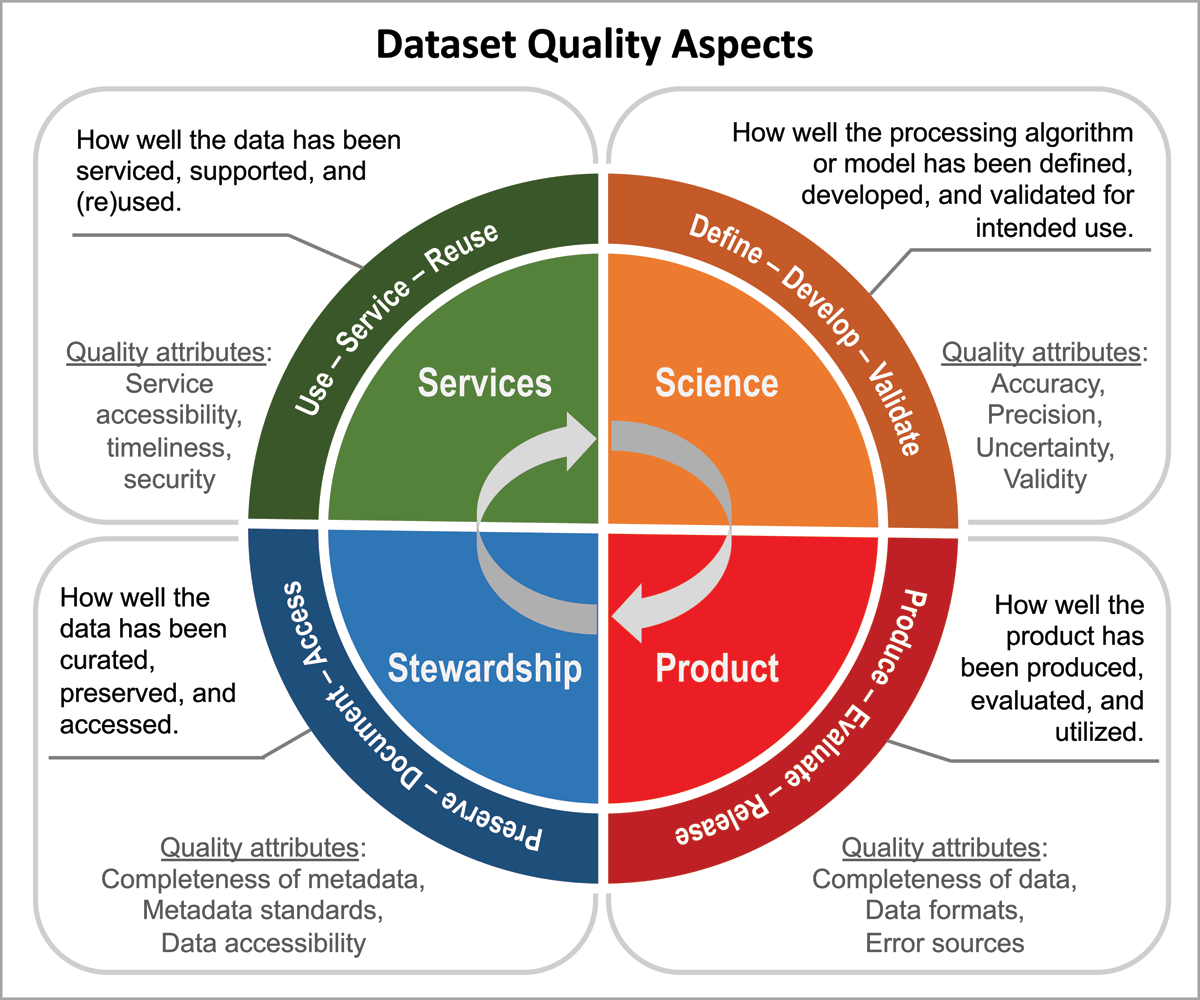

Figure 1

Brief description of four quality aspects (i.e., science, product, stewardship, and service) throughout the dataset lifecycle, with three key stages and a few quality attributes associated with each quality aspect (e.g., the define, develop, and validate stages for the science quality aspect). The quality aspects and associated stages are based on Ramapriyan et al. (2017), with the following changes made in response to feedback from the ESIP community and the International FAIR Dataset Quality Information (DQI) Community Guidelines Working Group: i) ‘Assess’ replaced by ‘Evaluate’ in the Product aspect; ii) ‘Deliver’ replaced by ‘Release’ in the Product aspect; and iii) ‘Maintain’ replaced by ‘Document’ in the Stewardship aspect. Additionally, metadata completeness is moved from the Product aspect to the Stewardship aspect. Creator: Ge Peng; Contributors to conceptualization: Lesley Wyborn and Robert R. Downs.

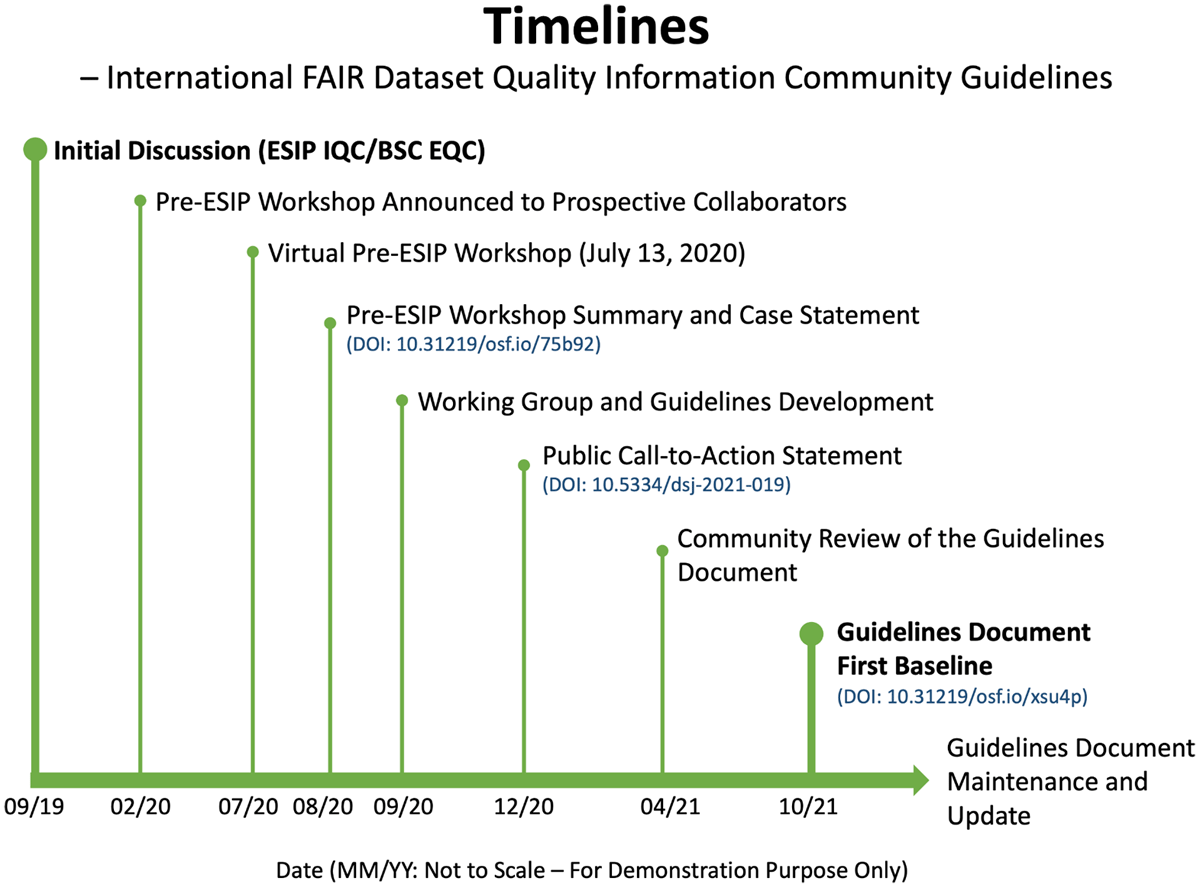

Figure 2

Schematic diagram of the timeline for the initiation, planning, development, community review, and first baseline of the guidelines document. The guidelines document will be updated in the future to improve its coverage of diverse disciplines. ESIP IQC: Information Quality Cluster of the Earth Science Information Partners; BSC EQC: Barcelona Supercomputing Center (BSC) Evaluation and Quality Control (EQC) team.

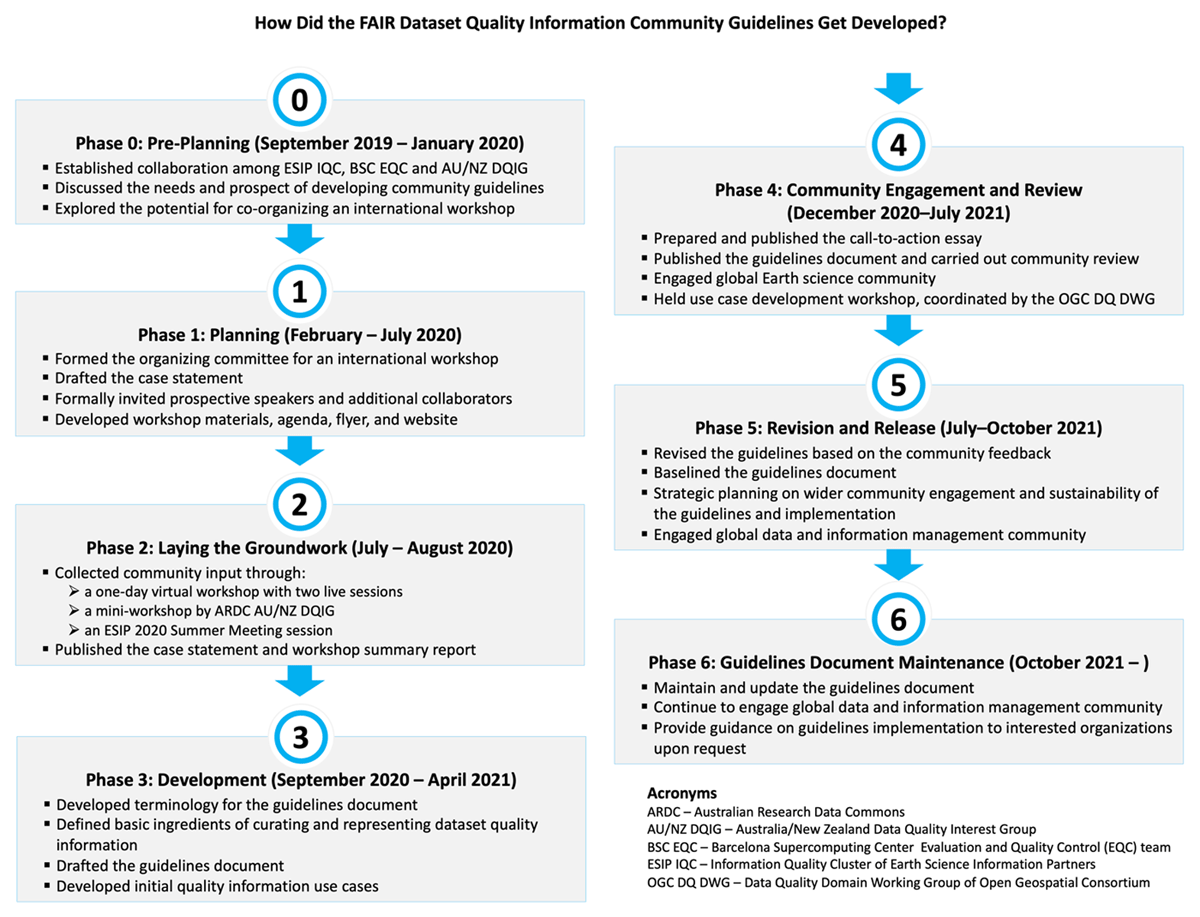

Figure 3

Flowchart outlining different phases of the guidelines development process, including the initiation, planning, development, community review and engagement, and baseline of the guidelines.

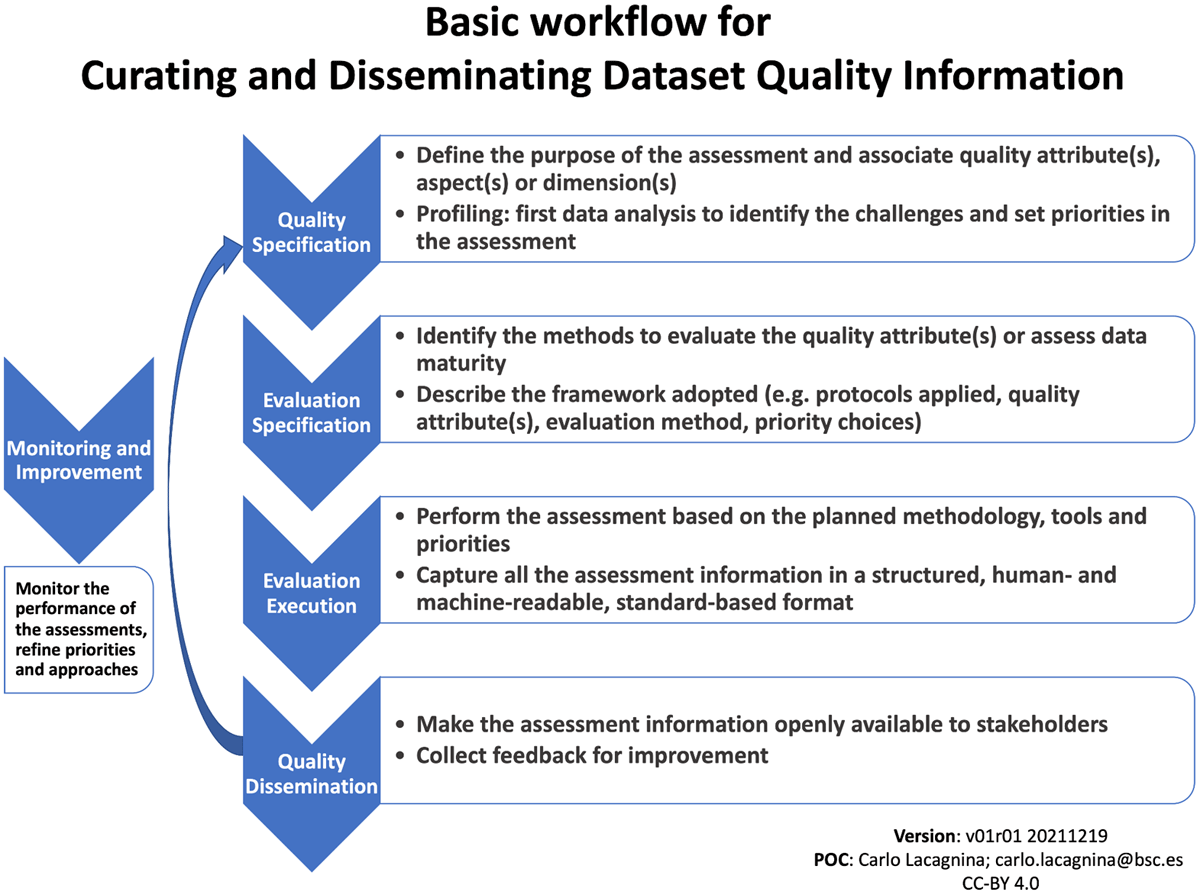

Figure 4

A schematic diagram of a basic workflow with relevant elements for curating and disseminating dataset quality information. Creator: Carlo Lacagnina. Contributor: Ge Peng.

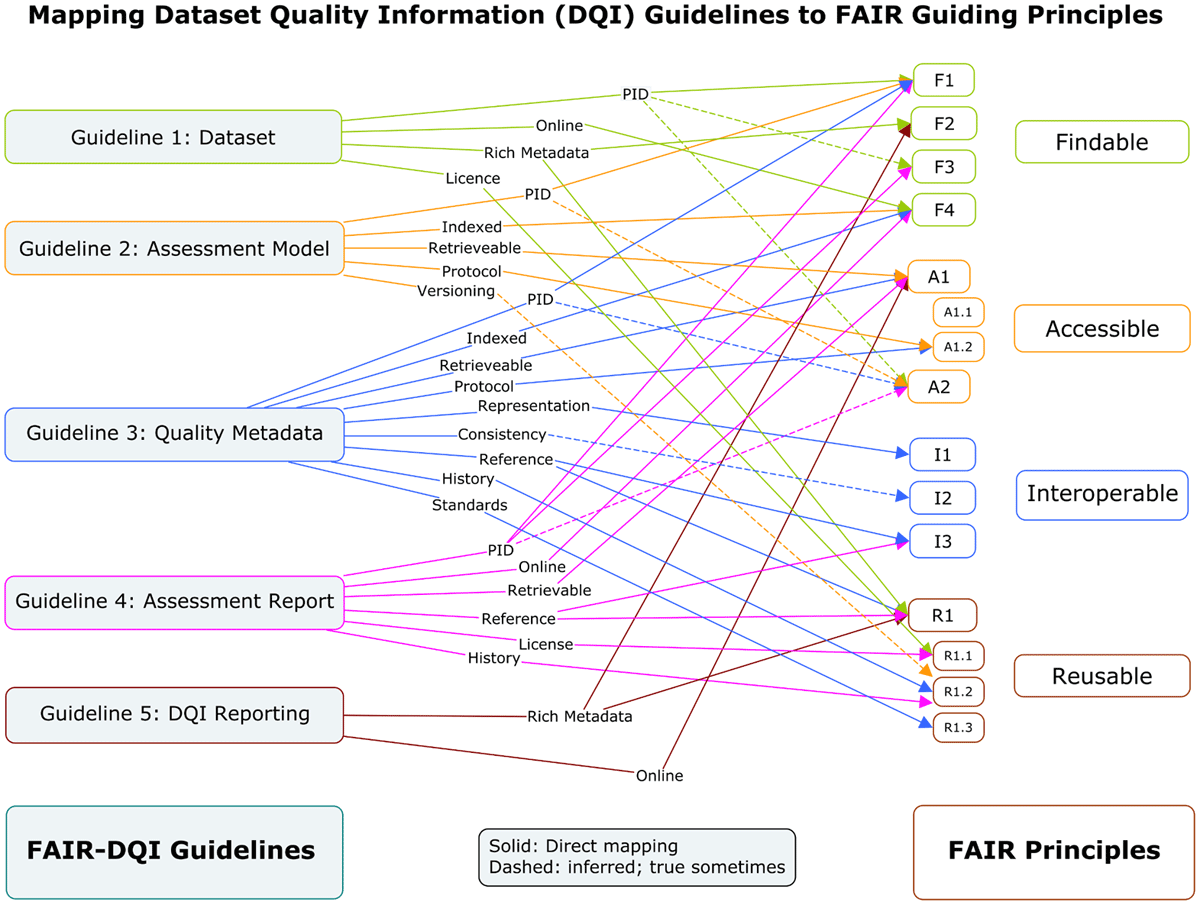

Figure 5

Diagram mapping the guidelines to the FAIR Guiding Principles as defined in Wilkinson et al. (2016).⁵ Solid lines represent direct mappings, while dashed lines represent indirect or weak mappings that are either inferred or may not always hold. {F, A, I, R}ₙ denotes the nth element of the findable, accessible, interoperable, and reusable principles, respectively. Based on Table 1 in Peng et al. (2021b), with additional weak mappings represented by the dashed lines. Creator: Ge Peng. Contributor: Anette Ganske.

Table 1

Examples of dataset quality assessment models and their compliance with Guideline 2.

| Assessment Model | Scientific Data Stewardship Maturity Matrix (Peng et al. 2015) | Stewardship Maturity Matrix for Climate Data (Peng et al. 2019b) | FAIR Data Maturity Model (RDA FAIR Data Maturity Model Working Group 2020) | Metadata Quality Framework (Bugbee et al. 2021) | Data Quality Analyses and Quality Control Framework (Woo & Gourcuff 2021) |
|---|---|---|---|---|---|
| Quality Entity (i.e., attribute, aspect, or dimension) | Stewardship | Stewardship | FAIRness | Metadata | Data |
| 2.1 – Publicly Available | Yes | Yes | Yes | Yes | Yes |
| 2.1 – PID | DOI | DOI | DOI | DOI | DOI |
| 2.2 – Indexed | Data Science Journal | Figshare | Zenodo | Data Science Journal | Integrated Marine Observing System Catalog |
| 2.3 – Retrievable Using Free, Open, Standards-Based Protocol | Yes | Yes | Yes | Yes | Yes |

Table 2

Examples of representing quality entities, assessment models and assessment results in machine-readable quality metadata and their compliance with Guideline 3.

| Quality Metadata Framework | NOAA OneStop DSMM Quality Metadata (Peng et al. 2019a) | ATMODAT Maturity Indicator (Heydebreck et al. 2020) | MetadataFromGeodata (Wagner et al. 2021) |
|---|---|---|---|
| Quality Entity | Stewardship | Any Quality Entity | Data and Metadata |
| 3.1 – Semantically and Structurally Consistent | Yes | Yes | Yes |
| 3.1 – Metadata Framework/Schema | International | Domain | Domain |
| 3.2 – Quality Entity Description | Yes | Yes | Yes |
| 3.3 – Assessment Method/Structure Description | Yes | Yes | Partly (contains an evaluation of the quality description rather than a description of the quality assessment) |
| 3.4 – Assessment Results Description | Yes | Yes | Yes |
| 3.5 – Versioning and the History of the Assessments | Yes | Versioning | Creation & Last Update Dates |

Table 3

Examples of human-readable dataset quality assessment reports and their compliance with Guideline 4.

| Quality Report | Lemieux et al. (2017) | Höck et al. (2020) | Cowley (2021) |
|---|---|---|---|
| Quality Entity | Stewardship | Data | Data |
| 4.1 – Follow Template | Yes | Yes | Yes |
| 4 – Quality Entity Description | Yes | Yes | Yes |
| 4 – Assessment Method Description | Yes | Yes | Yes |
| 4 – Assessment Results Description | Yes | Yes | Yes |
| 4.2 – License | Yes | Yes | Yes |
| 4.2 – Assessment History | Yes | Yes | Yes |
| 4.3 – Linked Report PID | Yes | No | Yes |

Table 4

Examples of disseminating assessment results online and their compliance with Guideline 5.

| Online Portal | JPSS Data Product Algorithm Maturity Portal⁶ | C3S Climate Data Store Dataset Quality Assessment Portal⁷ | Rolling Deck to Repository (R2R) QA Dashboard⁸ |
|---|---|---|---|
| Quality Entity | Algorithm | Technical and Scientific Quality | Sensor |
| 5 – Report Information in an Organized Way | Yes | Yes | Yes |
| 5.1 – Dataset Description | Minimal | Yes | Minimal |
| 5.2 – Assessed Quality Entity Description | Yes | Yes | Yes |
| 5.3 – Evaluation Method and Review Process Description | Yes | Yes | Yes |
| 5.4 – Description of How to Understand and Use Description | Some | Some | Minimal |