If we consider research work as a sequential process guided by the documentary records thus generated, then the researcher’s work can be seen as constituting five principal stages: learning, elaborating, communicating, depositing, and disseminating.

In the learning phase, the researcher acquires information via specific courses, conferences, work meetings, and by reading scientific publications. In the following phase, that of elaboration, they obtain information using a range of different research methods (e.g. laboratory work, fieldwork, and documentary work). This information (raw or elaborated) is then recorded, and communicated by means of a variety of scholarly outputs.

Some outputs undergo scholarly research quality assurance and assessment (i.e. peer-review) to validate their conclusions, methods, and claims, prior to their communication via formal publication (Tennant & Ross-Hellauer, 2020). Peer-reviewed publications are considered the most authoritative and recognizable research output (Huang & Chang, 2008) and are broadly used in research evaluation tasks as signals of scholarly reputation (Nicholas et al., 2015; Petersen et al., 2014). These publications, moreover, serve an archival function, preserving and making subsequent retrieval possible (Garvey & Griffith, 1972).

Other outputs are shared privately with colleagues or publicly published outside the scholarly publishing circuit. Non-reviewed documents comprise material supporting peer-reviewed publications (e.g. manuscript drafts, methodological designs, raw data, and scientific software), discarded material (e.g. personal notes, negative results, and preliminary designs), early material (e.g. research proposals), and professional publications (e.g. reports, working papers, technical papers, comments, blog posts, and opinion papers).

Scholarly outputs can subsequently be deposited on online platforms (e.g. publisher websites, personal websites, bibliographic databases, and repositories) for preservation, from where they are ultimately disseminated via different media. This last step might involve elaborating additional non-reviewed promotional documents (e.g. press releases, social media posts, informative videos, and infographics).

The advent of the academic web and social networking sites has facilitated the sharing of non-reviewed documents by researchers (Barjak, 2006; Priem, 2013). However, their collection and quantitative analysis via Altmetrics aggregators raises various challenges: because they have not gone through a formal publication process managed by publishers, bibliographic databases, or libraries, many non-reviewed documents lack persistent identifiers. Thus, both their diffusion and traceability are still limited.

Many repositories allow the inclusion of non-reviewed documents, such as conference presentations, datasets, reports, and images, assigning them persistent identifiers (e.g. handles). However, this practice does not solve the problem. First, the inclusion of non-reviewed documents depends on policy guidelines, which can differ significantly from one repository to another. Second, repositories are limited to a specific subject area or institution, making global analysis unfeasible. And, third, for various reasons, including ignorance or a preference for other external services, many researchers do not use the services offered by repositories (Borrego, 2017).

Among these external services, the role played by academic social networking sites stands out (Jordan, 2014, 2019; Ortega, 2016; Van Noorden, 2014), especially that of ResearchGate (RG). This networking site allows its users to deposit a wide variety of non-reviewed documents and to generate persistent identifiers free of charge using the digital object identifier (DOI) standard (henceforth RG-DOIs), thereby facilitating the dissemination and citation of academic documents that have not been published via formal scientific communication channels, and taking advantage of RG services for their dissemination and monitoring.

Determining the quantity and basic bibliographic properties of the documents deposited in RG and identified with an RG-DOI (i.e. RG-DOI publications) could provide insights into how these informal publications are shared and disseminated, as well as unveiling their scholarly and non-scholarly impact. Nonetheless, using RG for bibliographic analysis poses two significant challenges: first, bulk access to data is compromised since there is no API to collect metadata; and, second, the metadata quality might be limited, as users describe their publications without any bibliographic controls (Orduña-Malea & Delgado López-Cózar, 2020).

This study aims to describe the characteristics of this gray literature, while seeking to address obvious data collection challenges and descriptive limitations. To do so, the following research questions are raised:

- RQ1. How many RG-DOIs have been assigned over time?
- RQ2. What are the bibliographic properties of RG-DOI publications?
- RQ3. What is the metadata accuracy of RG-DOI publications?
- RQ4. What is the scholarly and non-scholarly impact of RG-DOI publications?

ResearchGate was created in 2008 with the aim of facilitating interconnectivity between academics via different social functionalities, both free and paid (Orduña-Malea & Delgado López-Cózar, 2020).

Among the free functionalities, the most notable are the deposit and dissemination of academic works, the collection of scholarly metrics, asynchronous messaging among researchers, a Q&A service, alerts, and job offers, while among the paid functionalities, we find marketing services (sponsored content, sponsored email, display ads, native ads, and event promotions), branding (publisher solutions), and recruitment (job postings).

According to official information provided by ResearchGate,① as of December 12, 2023, the platform covers 156 million publications and is used by 23 million registered users, mainly from Europe (33%) and Asia (28%), who specialize mainly in engineering (19%), medicine (15%), and biology (13%). The service receives 297 million monthly views on its publication pages, making ResearchGate a relevant platform for carrying out quantitative science studies.

The scientific literature on RG has grown significantly since 2015 (Prieto-Gutierrez, 2019) and has tended to focus on three main aspects: scholarly impact, individual and collective scholarly practices, and social and reputational features (Manca, 2018).

In the case of scholarly impact, ResearchGate has been characterized as a platform with distinctive publication and citation coverage, differing from other bibliographic databases (Singh et al., 2022), indexing a remarkable number of recent publications (Thelwall & Kousha, 2017b) and academic gray literature (Nicholas et al., 2016b), with biases towards experimental science disciplines (Thelwall & Kousha, 2017a), and exhibiting higher visibility for research institutions with a high number of academic staff (Lepori et al., 2018). In this line of research, we find studies on academic publications (Thelwall & Kousha, 2017a), universities (Lepori et al., 2018; Yan et al., 2018; Yan & Zhang, 2018), open data (Raffaghelli & Manca, 2023), and researchers, the latter of which is filtered by research organizations (Mikki et al., 2015; Ortega, 2015) and disciplines (Orduna-Malea & Delgado López-Cózar, 2017; Yan et al., 2021).

The study of scholarly impact has also revealed a variety of indicators, where citation-based metrics tend to correlate strongly, not only within ResearchGate but also across platforms, but correlate less strongly with alternative metrics (Martín-Martín et al., 2018; Mikki et al., 2015), reflecting the dependency on users’ activity within the platform. Moreover, RG’s indicators (e.g. RG Score) have been widely criticized for their opacity, lack of reproducibility, and susceptibility to manipulation by fraudulent behaviors aimed at achieving academic status (Copiello, 2019; Copiello & Bonifaci, 2018, 2019; Kirilova & Zoepfl, 2025; Kraker & Lex, 2015; Orduna-Malea et al., 2017; Yu et al., 2016).

Regarding scholarly practices, ResearchGate has been characterized as a platform with a significant representation of early career researchers, a strong bias towards engineering and biomedicine, and an underrepresentation of female authors (Mikki et al., 2015; Ortega, 2017).

While the literature highlights three general ResearchGate user types (information source users, friend users, and information seeker users) (Yan et al., 2018), the platform is mainly used for knowledge sharing, especially using collaborative Q&A strategies (Ebrahimzadeh et al., 2020; Jeng et al., 2017; Li et al., 2018), accessing literature for personal consumption (Mason & Sakurai, 2021), posting English-language outputs, and featuring publications that are recent, highly cited, and published by reputable sources (Liu & Fang, 2018), often infringing copyright (Borrego, 2017; Jamali, 2017; Lee et al., 2019).

However, despite the advantages of the platform, most users do not use their RG account heavily, spending little time searching and updating their profiles (Meier & Tunger, 2018), and reporting negative feelings related to productivity and stress (Muscanell & Utz, 2017).

In the case of platform features, the literature has analyzed ResearchGate as a driver of scholarly reputation, through the availability of academic social networking features (e.g. open review, following users or projects, and sending emails), reputational indicators (e.g. RG Score, Reads, and Recommendations), and marketing-based mechanisms (e.g. e-mails informing users on their peers’ contributions, activities, and achievements) (Nicholas et al., 2016a, 2016b; Orduna-Malea et al., 2016; Orduña-Malea & Delgado López-Cózar, 2020), all of which aim to present academic research as a game in order to motivate and promote users’ participation (Hammarfelt et al., 2016). Specifically, ResearchGate excels in its analytical capabilities, interactivity, and ease of publication deposit, while its search, browsing, site navigation, and export functions are weaker (Bhardwaj, 2017).

Among its features, ResearchGate launched the free DOI service on August 13, 2014, to allow its users to showcase their research, make it citable, and date their discoveries (ResearchGate, 2014). DOIs created by RG (RG-DOIs) are generated at the user’s request once the publication has been deposited on the platform. This option appears on the “More” tab and is offered only for certain document types. The system is automatic and instantaneous, generating a permanent identifier registered by DataCite. The RG-DOIs are embedded in doi.org and rgdoi.net domain names, with “10.13140” as the DOI prefix.

This functionality might influence how researchers share their unpublished work, while impacting the competition between RG and institutional repositories in their role as agents for the preservation of digital scholarly documents. It also raises the question as to whether RG should be considered a “publisher” (Konkiel, 2014).

Given that users directly request DOIs when depositing their work in RG, an analysis of RG-DOIs would facilitate the study of an unknown dimension of DOIs, that is, their use to identify and describe scholarly publications either unpublished or originally published without a DOI.

First, the DOI Resolver was used to determine the registration agency that generates RG-DOIs. For this purpose, a random RG-DOI was tested via HTTPS,② returning DataCite as the registration agency. A Python script was then designed to recover all findable DOIs generated by RG using the DataCite REST API.③
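The two lookups described above can be sketched as follows. This is a minimal illustration and not the script used in the study: the function names are hypothetical, the example DOI is invented, and the endpoint shapes (the doi.org `doiRA` service and the DataCite `/dois` endpoint with `prefix`, `page[size]`, and `page[cursor]` parameters) are assumptions that should be checked against the current documentation of each service. No network request is made; the code only builds the URLs and parses a response of the assumed shape.

```python
import json
from urllib.parse import quote, urlencode

RG_PREFIX = "10.13140"  # ResearchGate DOI prefix reported in the text


def registration_agency_url(doi: str) -> str:
    """URL asking the DOI resolver which agency registered a DOI (assumed endpoint)."""
    return "https://doi.org/doiRA/" + quote(doi, safe="/")


def parse_registration_agency(payload: str) -> str:
    """Read the agency name from a doiRA-style JSON response (assumed shape)."""
    records = json.loads(payload)
    return records[0]["RA"]


def datacite_query_url(prefix: str = RG_PREFIX, page_size: int = 1000) -> str:
    """First-page URL for listing DOIs under a prefix (assumed parameter names)."""
    params = {"prefix": prefix, "page[size]": page_size, "page[cursor]": 1}
    return "https://api.datacite.org/dois?" + urlencode(params)


# Hypothetical response body, for illustration only
example_payload = '[{"DOI": "10.13140/EXAMPLE", "RA": "DataCite"}]'
agency = parse_registration_agency(example_payload)
```

Cursor-based paging is assumed here because the result set exceeds one million records; each response would then be expected to carry a link to the next page.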

The query returned 1,092,934 RG-DOIs registered as of May 21, 2023. For each RG-DOI, the DataCite REST API response included all available bibliographic metadata of the corresponding RG publication in the JSON format. Python scripts were subsequently built to extract the metadata obtained. The metadata fields eventually analyzed here were the registration date, publication date, language, and Resource Type.
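The extraction step can be illustrated with a short sketch. It assumes that each record follows a JSON:API layout with the metadata under `attributes`, and that the relevant keys are `registered`, `publicationYear`, `language`, and `types.resourceType`; these key names, the function name, and the sample record are assumptions for illustration, not a description of the scripts actually used.

```python
from typing import Any, Dict, Optional


def extract_fields(record: Dict[str, Any]) -> Dict[str, Optional[str]]:
    """Pull the four analyzed fields out of one DataCite-style record.

    Missing values are returned as None so that they can later be
    counted as "Null".
    """
    attrs = record.get("attributes", {})
    types = attrs.get("types") or {}
    registered = attrs.get("registered")  # assumed ISO timestamp string
    pub_year = attrs.get("publicationYear")
    return {
        "doi": attrs.get("doi"),
        "registration_year": registered[:4] if registered else None,
        "publication_year": str(pub_year) if pub_year is not None else None,
        "language": attrs.get("language") or None,
        "resource_type": types.get("resourceType") or None,
    }


# Hypothetical record, for illustration only
sample = {
    "attributes": {
        "doi": "10.13140/EXAMPLE",
        "registered": "2015-03-02T10:00:00Z",
        "publicationYear": 2012,
        "language": "en",
        "types": {"resourceType": "Poster"},
    }
}
row = extract_fields(sample)
```

Returning `None` for absent values keeps records without a year, language, or document type in the dataset, which is what allows the Null categories to be reported.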

The number of citations from formally published publications determined the citation-based scientific impact of publications identified with RG-DOIs. The Web of Science’s Cited References database was searched through the “cited DOI” search field, using the ResearchGate DOI prefix as a string and the asterisk as a wildcard (i.e. “10.13140*”). This process was carried out on May 22, 2023, obtaining 24,123 records. Manual data cleaning was then carried out to eliminate broken or invalid DOIs, obtaining 21,092 unique RG-DOIs.
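The cleaning reported above was manual. As an illustration only, an automated pre-filter of the kind that could precede such a manual check is sketched below; the validity pattern, the normalization rules, and the function name are assumptions and do not reproduce the criteria applied in the study.

```python
import re
from typing import Iterable, List

# Assumed pattern: the RG prefix, a slash, and a non-empty suffix without spaces
RG_DOI_PATTERN = re.compile(r"^10\.13140/\S+$")


def clean_dois(raw: Iterable[str]) -> List[str]:
    """Normalize case and whitespace, drop malformed strings, and deduplicate."""
    seen = set()
    cleaned = []
    for value in raw:
        # DOIs are case-insensitive, so upper-casing makes duplicates comparable
        doi = value.strip().rstrip(".,;").upper()
        if RG_DOI_PATTERN.match(doi) and doi not in seen:
            seen.add(doi)
            cleaned.append(doi)
    return cleaned


# Hypothetical cited-reference strings, for illustration only
candidates = [
    "10.13140/rg.2.1.1",
    "10.13140/RG.2.1.1 ",
    "10.13140/",
    "10.13140",
    "10.13140/RG.2.1.2.",
]
unique_dois = clean_dois(candidates)
```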

Altmetrics mentions were used to determine the non-scholarly impact of the publications with RG-DOIs. A Python script was again designed to collect all RG-DOI mentions using Elsevier’s PlumX Metrics API.④

It was decided to carry out two data collections (May 22 and October 22, 2023) to determine the coverage of RG-DOIs in PlumX with greater robustness. All statistics were performed with the most recent data (October). However, the data from May were used to make internal comparisons between the data collections.

PlumX only provides the raw number of Mendeley Readers, so an additional script was created to collect data about Readers’ roles through the Mendeley API.⑤ Data were collected on October 29, 2023, yielding 7,603 records with Readers’ data.

Given that RG users directly deposit and describe their publications, we need to determine how accurate the metadata provided by DataCite is. A random sample of 666 RG-DOIs (99% confidence level with a margin of error of 5%) was taken to check the metadata accuracy.
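The text does not state the formula behind the sample size. One plausible reconstruction, offered here as an assumption, is Cochran's formula with maximum variability (p = 0.5), a finite population correction, and the commonly tabulated value z = 2.58 for 99% confidence. Under these assumptions the calculation yields the reported figure:

```python
import math


def sample_size(population: int, z: float, margin: float, p: float = 0.5) -> int:
    """Cochran's sample size with finite population correction, rounded up."""
    n0 = (z ** 2) * p * (1 - p) / (margin ** 2)
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


# z = 2.58 is an assumed, rounded critical value for 99% confidence
n = sample_size(population=1_092_934, z=2.58, margin=0.05)
print(n)  # 666
```

With a population above one million, the finite population correction barely changes the result; the sample size is driven almost entirely by the confidence level and the margin of error.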

The author manually checked the publication date and document type in the DataCite records (resourceType field), RG’s publication page, and the document’s full text for each RG-DOI. This process was carried out between October 12 and October 15, 2023.

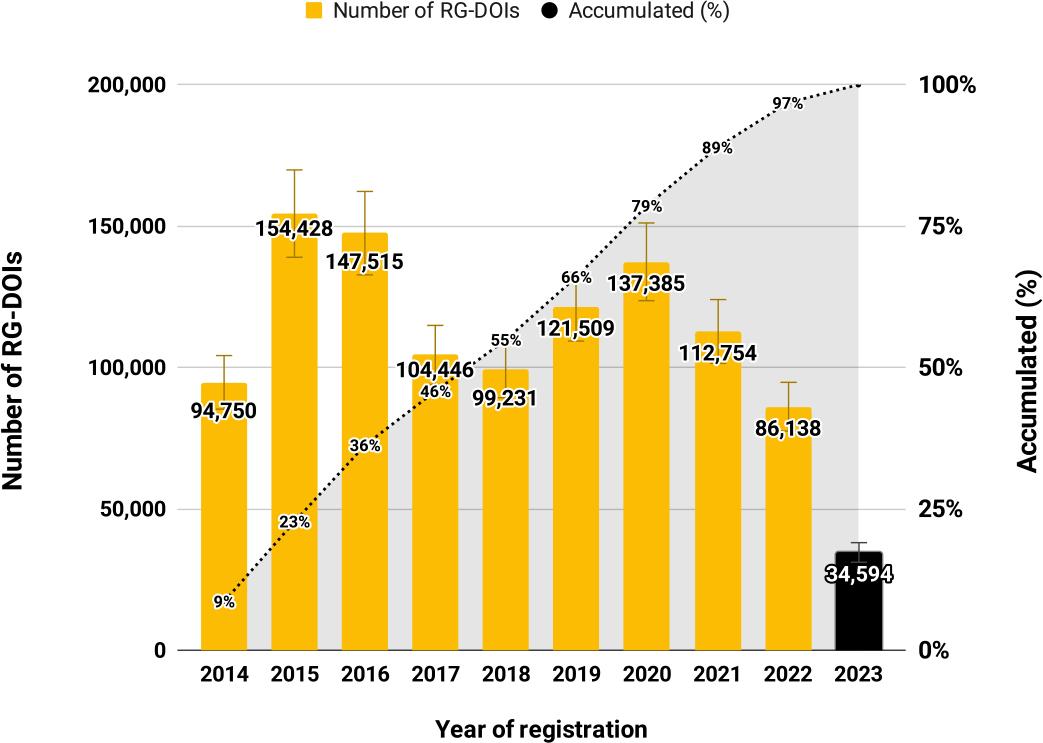

A total of 1,092,934 RG-DOIs have been registered as of May 21, 2023 (0.6% of all publications deposited in RG) since the launch of the DOI generation service in 2014. The two years following its implementation recorded the highest annual number of registrations (154,428 and 147,515 RG-DOIs, respectively). However, starting in 2016, the number of registrations decreased sharply, before rising again in 2019 and 2020 due to the increase in platform use during the COVID-19 lockdown (ResearchGate, 2021). Since then, the number of RG-DOIs has once again fallen, with 2022 registering the lowest annual value since the service was launched, with 86,138 new RG-DOIs (Figure 1).

Figure 1. Number of RG-DOIs registered over time. Note: 2023 is incomplete; 184 records appear without the year of registration in the metadata field.

The years with the most publications correspond to 2015 (123,396), 2016 (105,489), 2019 (103,461), and 2020 (100,929), which coincide with the years recording the most RG-DOI registrations (see Figure 1). However, 332 records have been identified without a publication date, all with a registration date of 2016.

The publication date of 78.53% of the RG-DOI registrations lies between 2014 and 2023. The remaining 21.4% (234,342 RG publications)–a significant percentage–correspond to previous publications, except 2,874 records for which the publication date is later than the RG-DOI registration date.

More specifically, the RG-DOI registration date does not coincide with the publication date in 43.8% of the records. These data were especially at variance in 2014 (the first year of the RG-DOI service), when the discrepancy reached 65.6% (Table 1).

Table 1. Differences between the registration and publication dates.

| Year of Registration | RG-DOIs with other year of publication | % | Difference (Avg.) | RG-DOIs with same year of publication | % | Total RG-DOI publications |
|---|---|---|---|---|---|---|
| 2014 | 62,114 | 65.6 | 5.9 | 32,636 | 34.4 | 94,750 |
| 2015 | 72,265 | 46.8 | 6.3 | 82,164 | 53.2 | 154,429 |
| 2016 | 75,617 | 51.3 | 5.7 | 71,900 | 48.7 | 147,517 |
| 2017 | 48,624 | 46.6 | 5.3 | 55,822 | 53.4 | 104,446 |
| 2018 | 37,188 | 37.5 | 5.3 | 62,043 | 62.5 | 99,231 |
| 2019 | 46,372 | 38.2 | 5.4 | 75,137 | 61.8 | 121,509 |
| 2020 | 53,984 | 39.3 | 5.0 | 83,401 | 60.7 | 137,385 |
| 2021 | 39,705 | 35.2 | 5.0 | 73,049 | 64.8 | 112,754 |
| 2022 | 28,579 | 33.2 | 4.6 | 57,559 | 66.8 | 86,138 |
| 2023 | 14,121 | 40.8 | 4.4 | 20,473 | 59.2 | 34,594 |
| Null | N/A | N/A | N/A | N/A | N/A | 181 |
| All | 478,750 | 43.8 | 5.2 | 614,184 | 56.2 | 1,092,934 |

Note: N/A: data not available.
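The percentages and average differences in this table can be derived from pairs of registration and publication years. The sketch below shows one way to do so; it assumes that the "Difference (Avg.)" column is the mean absolute gap in years among records whose years differ, which the text does not state explicitly. The function name and the input pairs are hypothetical.

```python
from typing import List, Optional, Tuple

YearPair = Tuple[Optional[int], Optional[int]]


def year_mismatch(pairs: List[YearPair]) -> Tuple[float, float]:
    """Return (% of records whose years differ, mean absolute gap among them).

    Each pair is (registration_year, publication_year); pairs with a
    missing year are skipped.
    """
    valid = [(r, p) for r, p in pairs if r is not None and p is not None]
    gaps = [abs(r - p) for r, p in valid if r != p]
    share = 100 * len(gaps) / len(valid) if valid else 0.0
    mean_gap = sum(gaps) / len(gaps) if gaps else 0.0
    return share, mean_gap


# Hypothetical records, for illustration only
share, mean_gap = year_mismatch(
    [(2014, 2014), (2014, 2008), (2015, 2011), (2015, None)]
)
```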

Nineteen different document types with at least one registered RG-DOI have been identified (Table 2), among which Posters (13%), Presentations (12.6%), and Conference papers (10.8%) are most evident. However, there is no information on the document type for a significant number of records (26.1%).

Table 2. Number of RG-DOI publications according to document type.

| Document type | RG-DOI publications | % |
|---|---|---|
| Null | 284,985 | 26.1 |
| Poster | 141,725 | 13.0 |
| Presentation | 137,372 | 12.6 |
| Conference Paper | 118,238 | 10.8 |
| Preprint | 101,356 | 9.3 |
| Thesis | 86,767 | 7.9 |
| Technical Report | 78,429 | 7.2 |
| Other | 66,513 | 6.1 |
| Research Proposal | 16,452 | 1.5 |
| Working Paper | 13,526 | 1.2 |
| Book | 13,231 | 1.2 |
| Experiment Findings | 11,926 | 1.1 |
| Method | 9,375 | 0.9 |
| Publication | 6,471 | 0.6 |
| Code | 4,570 | 0.4 |
| Chapter | 1,425 | 0.1 |
| Negative Results | 218 | 0.0 |
| Patent | 157 | 0.0 |
| Article | 143 | 0.0 |
| Cover Page | 55 | 0.0 |
| Total | 1,092,934 | 100 |

Note: Categories based on the “ResourceType” metadata field.

On the other hand, a minority presence of formal document types is observed, such as Articles and Chapters, which do not necessarily have a DOI (Khurana et al., 2023).

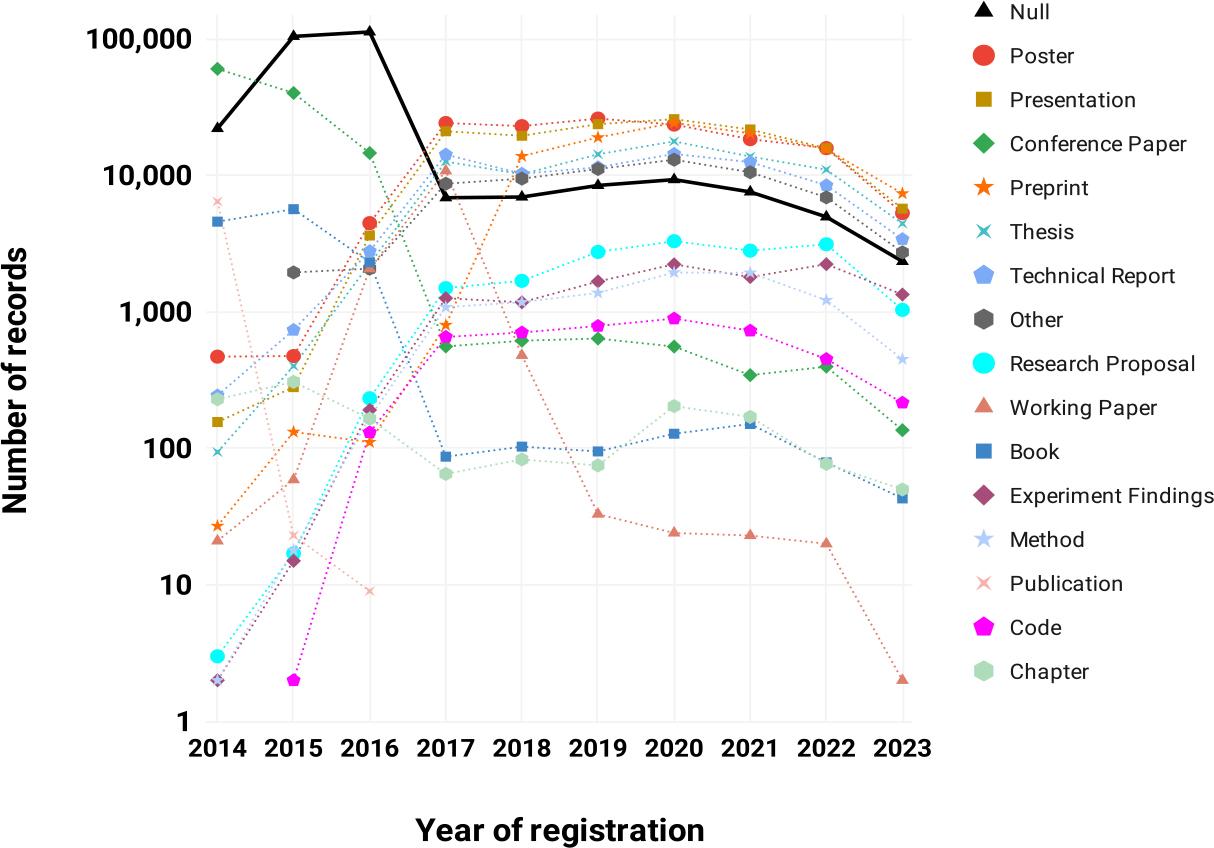

Considering the last full year available (2022), the most common types are Posters, Presentations, Preprints, and Doctoral Theses (Figure 2). Indeed, these first two types have experienced significant growth over the years.

Figure 2. Number of RG-registered DOIs per document type over time. Note: The year 2023 is incomplete.

Anomalous behavior was detected in 2017, with the number of some document types growing significantly from 2016 to 2017. These included Other (from 2,073 to 8,686 publications), Posters (from 4,469 to 24,176), Presentations (from 3,627 to 21,039), and Working Papers (from 2,067 to 10,797). However, the number of records decreased abruptly in the case of other document types, including Conference Papers (from 14,579 to 558) and, most remarkably, those publications without a defined document type (from 112,319 to 6,856).

DataCite provides language information for 67.5% of total publications with RG-DOI, identifying up to 26 different languages, with English being the predominant language (74.4%), followed at some distance by Spanish (7.2%) and German (4.6%). If we compare these results with the distribution of languages for publications indexed in Scopus (Table 3), a number of significant differences can be observed. On the one hand, some languages appear overrepresented in RG (e.g. Catalan, Indonesian, Malay, Portuguese, Romanian, Slovak, and Spanish). On the other hand, other languages are absent (e.g. Chinese, Greek, Japanese, Korean, Russian, and Turkish).

Table 3. Number of publications with RG-DOIs according to language.

| Language | ResearchGate: number of RG-DOI publications | ResearchGate: % | Scopus: number of publications | Scopus: % |
|---|---|---|---|---|
| English | 549,356 | 74.44 | 82,061,257 | 87.45 |
| Spanish | 53,403 | 7.24 | 946,960 | 1.01 |
| German | 33,689 | 4.57 | 2,673,428 | 2.85 |
| Portuguese | 26,097 | 3.54 | 354,693 | 0.38 |
| French | 18,012 | 2.44 | 1,511,694 | 1.61 |
| Catalan | 11,364 | 1.54 | 5,415 | 0.01 |
| Indonesian | 6,695 | 0.91 | 2,755 | 0.00 |
| Malay | 6,569 | 0.89 | 2,137 | 0.00 |
| Italian | 6,351 | 0.86 | 542,782 | 0.58 |
| Romanian | 5,731 | 0.78 | 46,341 | 0.05 |
| Slovak | 3,880 | 0.53 | 37,182 | 0.04 |
| Dutch | 2,469 | 0.33 | 154,781 | 0.16 |
| Swedish | 2,138 | 0.29 | 74,894 | 0.08 |
| Danish | 2,092 | 0.28 | 68,904 | 0.07 |
| Hungarian | 1,939 | 0.26 | 73,064 | 0.08 |
| Esperanto | 1,854 | 0.25 | 170 | 0.00 |
| Other | 6,322 | 0.86 | 5,286,659 | 5.63 |
| Total | 1,092,934 | 100 | 93,843,116 | 100 |

Note: Only languages with at least 1,000 records for publications with RG-DOIs.

For the random sample of 666 documents, the accuracy of the publication date of DataCite records was verified by comparing the date indicated in DataCite’s metadata with the date stated in the document itself. Five RG-DOIs were removed from the analysis as they were no longer registered in DataCite. For the remaining 661 documents, the date was correct in 46.9% of cases and incorrect in 8.6% (most notably the case of Presentations and Theses). However, 44.1% of the documents could not be verified as the date was not indicated in the full text of the deposited document. The absence of a publication date was especially apparent in Posters (58 undated publications), Presentations (35), Conference Papers (34), and Preprints (31), as well as in publications without an assigned category (87). In the case of datasets, even though the date was the same as the deposit date in some cases, there is no guarantee that this dataset had not been created earlier and deposited on some other platform.

Regarding the accuracy of the document type, for 34.6% of the publications there was no correspondence between the document type indicated in DataCite’s metadata and the type displayed on the publication page. This discrepancy is fundamentally attributable to publications without a type in DataCite (i.e. Null). After accessing the publication pages of these RG-DOIs, the author was able to verify that these documents were labeled as Data (45 documents) and Research (43). It should be stressed that the “Research” tag does not appear among the document types that users can employ to categorize publications on RG, nor does it appear as a document type in DataCite.

Likewise, discrepancies were found between the label assigned to the publication page and the actual document type, identified after a manual inspection of the full text. In this case, a lack of agreement was detected for 40.5% of the publications in the sample. The errors were concentrated in the Research (85 incorrectly assigned documents, of which 26 were preprints, 17 articles, and 13 reports), Conference Paper (35 incorrectly assigned documents, of which 17 were preprints and 10 posters), and Presentation (20 incorrectly assigned documents, 11 of which were preprints) labels.

Agreement between the typology indicated in the metadata and the actual document type was low (43.4%), mainly as a result of documents not being categorized or being categorized as Other. Many discrepancies were also found for the Conference Paper type (40 documents incorrectly categorized, of which 18 were preprints, 10 posters, and 6 presentations).

If we exclude those documents that were not categorized in DataCite, the agreement percentages in the case of document typology increase significantly, especially between DataCite and the Publication page, as can be seen in Table 4.

Table 4. Percentage of agreement between the actual document type, the document type indicated in DataCite, and the document type displayed on the publication page.

| | Metadata (all, N = 661) | Metadata (without null, N = 485) | Page (all, N = 661) | Page (without null, N = 485) | Real (all, N = 661) | Real (without null, N = 485) |
|---|---|---|---|---|---|---|
| Metadata | -- | -- | 65.4% | 90% | 43.4% | 59.8% |
| Page | 65.4% | 90% | -- | -- | 59.5% | 61.7% |
| Real | 43.4% | 59.8% | 59.5% | 61.7% | -- | -- |
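The pairwise agreement percentages can be computed as the share of sampled documents for which two of the three labels coincide, optionally leaving out documents that have no type in DataCite. The sketch below illustrates this under that assumption; the function name, the dictionary keys, and the four example documents are hypothetical.

```python
from typing import Dict, List, Optional

Doc = Dict[str, Optional[str]]


def agreement(docs: List[Doc], left: str, right: str,
              drop_null_metadata: bool = False) -> float:
    """Percentage of documents whose `left` and `right` labels match.

    With drop_null_metadata=True, documents without a DataCite type are
    excluded first, mirroring the "without null" columns.
    """
    rows = [d for d in docs if not (drop_null_metadata and d["metadata"] is None)]
    if not rows:
        return 0.0
    matches = sum(1 for d in rows if d[left] == d[right])
    return 100 * matches / len(rows)


# Hypothetical sample, for illustration only
docs = [
    {"metadata": None, "page": "Data", "real": "Dataset"},
    {"metadata": "Poster", "page": "Poster", "real": "Poster"},
    {"metadata": "Conference Paper", "page": "Conference Paper", "real": "Preprint"},
    {"metadata": "Thesis", "page": "Thesis", "real": "Thesis"},
]
all_docs = agreement(docs, "metadata", "page")
without_null = agreement(docs, "metadata", "page", drop_null_metadata=True)
```

Because a document without a DataCite type can never match its page label, removing those documents raises the metadata-page agreement most sharply.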

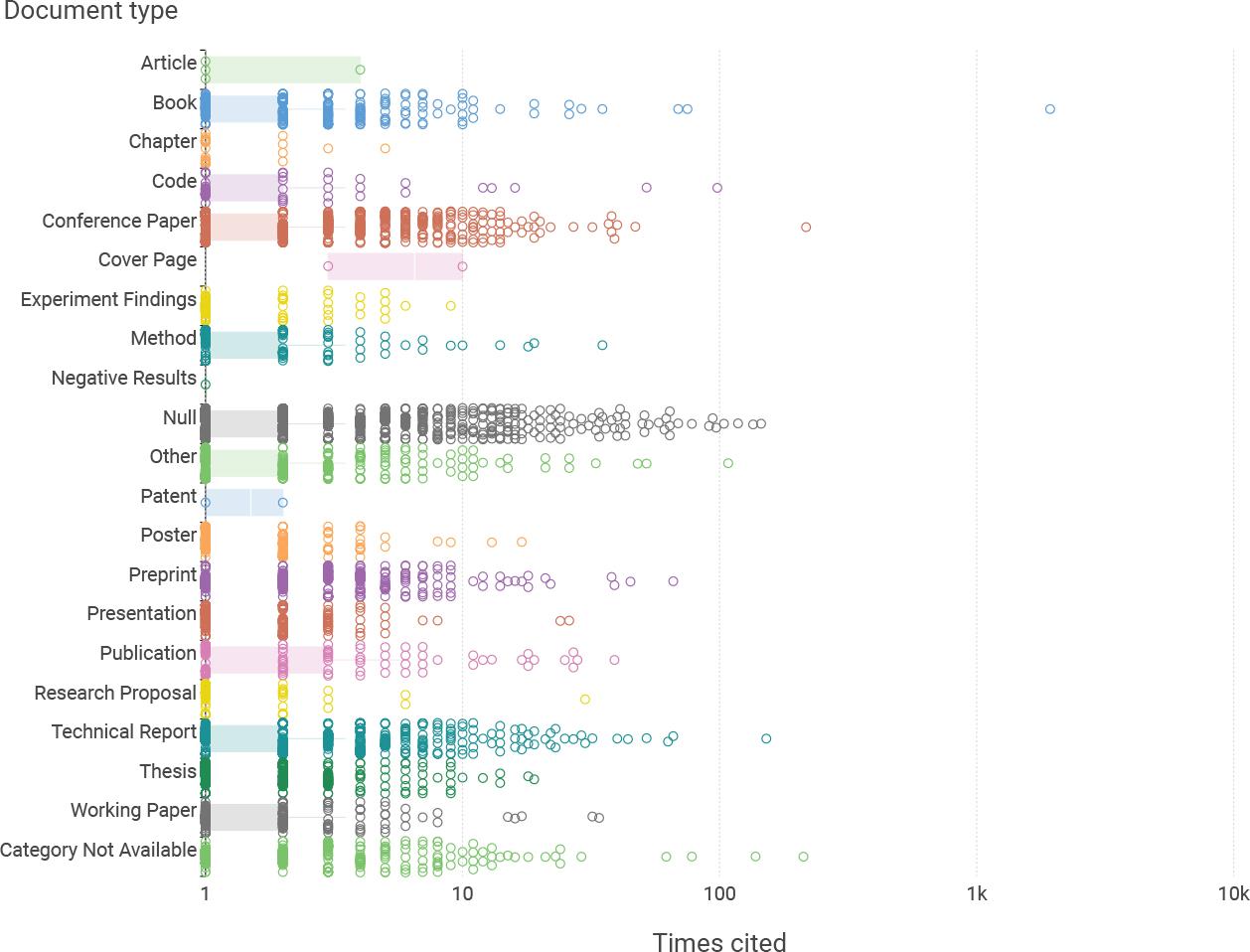

The number of RG-DOI publications cited by publications indexed in WoS stands at 20,954 (1.92% of the total RG-DOIs identified), accumulating a total of 41,492 citations. The most cited publication is a book cited 1,932 times (Popay et al., 2006). The average number of citations to these publications is low (1.98), exhibiting a highly skewed distribution, in which the 10 publications with the most citations concentrate 7.9% of the citations to RG-DOIs, while 73.3% of the RG-DOI publications receive one citation each.
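The summary figures in this paragraph (mean citations, share of citations held by the top 10 publications, and share of publications cited once) can be obtained from a list of per-publication citation counts. A minimal sketch follows; the function name and the toy counts are hypothetical, and only the last line uses figures taken from the text.

```python
from typing import Dict, List


def citation_summary(counts: List[int], top_n: int = 10) -> Dict[str, float]:
    """Mean citations, % of citations held by the top_n items, % of items cited once."""
    ordered = sorted(counts, reverse=True)
    total = sum(ordered)
    return {
        "mean": total / len(ordered),
        "top_share": 100 * sum(ordered[:top_n]) / total,
        "single_share": 100 * sum(1 for c in ordered if c == 1) / len(ordered),
    }


# Toy counts, for illustration only
summary = citation_summary([10, 1, 1, 1, 1, 1, 3, 2], top_n=1)

# Check of the reported mean: 41,492 citations over 20,954 cited RG-DOIs
reported_mean = round(41_492 / 20_954, 2)
```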

In absolute terms, Books, Conference Papers, Technical Reports, and Theses are the most frequently cited document types, along with Preprints (Table 5). By contrast, despite being the most frequent typologies with RG-DOI, only 0.68% of Posters and 0.61% of Presentations are cited at least once. In addition, the high average number of citations received by Code stands out, despite the low number of cited RG-DOIs in this category (74).

Table 5. Number of documents with RG-DOI cited by WoS publications.

| Document type | Number of records | % | Citations Average | Citations Median | Citations Max |
|---|---|---|---|---|---|
| Null | 5,603 | 1.97 | 2.15 | 1.00 | 145 |
| Conference Paper | 3,357 | 2.84 | 1.89 | 1.00 | 217 |
| Technical Report | 2,936 | 3.74 | 1.90 | 1.00 | 152 |
| Preprint | 1,978 | 1.95 | 1.65 | 1.00 | 66 |
| Thesis | 1,334 | 1.54 | 1.41 | 1.00 | 19 |
| Other | 984 | 1.48 | 1.94 | 1.00 | 108 |
| Poster | 962 | 0.68 | 1.19 | 1.00 | 17 |
| Presentation | 839 | 0.61 | 1.28 | 1.00 | 26 |
| Book | 624 | 4.72 | 5.37 | 1.00 | 1,932 |
| Working Paper | 375 | 2.77 | 1.78 | 1.00 | 34 |
| Method | 190 | 2.03 | 2.04 | 1.00 | 35 |
| Experiment Findings | 134 | 1.12 | 1.49 | 1.00 | 9 |
| Publication | 133 | 2.06 | 3.84 | 1.00 | 39 |
| Research Proposal | 102 | 0.62 | 1.55 | 1.00 | 30 |
| Code | 74 | 1.62 | 4.03 | 1.00 | 98 |
| Chapter | 30 | 2.11 | 1.33 | 1.00 | 5 |
| Article | 4 | 2.80 | 1.75 | 1.00 | 4 |
| Cover Page | 2 | 3.64 | 6.50 | 6.50 | 10 |
| Patent | 2 | 1.27 | 1.50 | 1.50 | 2 |
| Negative Results | 1 | 0.46 | 1.00 | 1.00 | 1 |

In any case, the low median values (1 in almost all document types) reflect that most publications accrued just one citation, with a few publications surpassing 100 citations, as the boxplot displayed in Figure 3 shows.

Figure 3. Number of citations received by RG-registered DOIs per document type.

In the case of alternative metrics, only 0.9% (10,335) of the RG-DOIs have received at least one mention. In absolute terms, Conference Papers and, to a lesser extent, Technical Reports, are the most frequently mentioned document types. As can be seen in Table 6, the most important source of mentions is Mendeley, which accrues 81,884 Reads from 7,904 RG-DOIs, while the number of mentions from news (415 mentions to 176 RG-DOIs) and policy documents (1,418 mentions to 921 RG-DOIs) is low.

Table 6. Number of records with Altmetrics performance per record type and metric.

| Record types | Total | % | News count | Readers count | Policy citations |
|---|---|---|---|---|---|
| Null | 3,725 | 1.3 | 84 | 2,690 | 428 |
| Conference Paper | 3,083 | 2.6 | 28 | 2,683 | 100 |
| Technical Report | 622 | 0.8 | 26 | 326 | 178 |
| Book | 581 | 4.4 | 5 | 436 | 55 |
| Thesis | 492 | 0.6 | 2 | 421 | 21 |
| Poster | 431 | 0.3 | 6 | 332 | 10 |
| Presentation | 386 | 0.3 | 1 | 294 | 20 |
| Preprint | 286 | 0.3 | 12 | 195 | 17 |
| Other | 275 | 0.4 | 5 | 218 | 29 |
| Working Paper | 190 | 1.4 | 3 | 107 | 48 |
| Publication | 85 | 1.3 | 1 | 52 | 7 |
| Code | 69 | 1.5 | 2 | 62 | 1 |
| Chapter | 43 | 3.0 | 0 | 41 | 1 |
| Method | 27 | 0.3 | 0 | 18 | 3 |
| Research Proposal | 19 | 0.1 | 0 | 14 | 0 |
| Experiment Findings | 15 | 0.1 | 0 | 10 | 1 |
| Article | 4 | 2.8 | 0 | 3 | 0 |
| Cover Page | 1 | 1.8 | 0 | 0 | 1 |
| Patent | 1 | 0.6 | 0 | 1 | 0 |
| TOTAL | 10,335 | 0.9 | 175 | 7,903 | 920 |

Note: One record may be counted under more than one PlumX metric. Source: PlumX.

Of the 19,885 Reads captured directly from the Mendeley API, a high percentage are determined as being attributable to students (47.7%), including undergraduates (1,798), postgraduates (3,541) and doctoral students (4,145). The rest of the Reads are divided between researchers (3,524), lecturers and professors (3,877), others (1,410), and librarians (240). The role of the readers of 262 Reads remains unspecified.

Although a significant number of registered RG-DOIs was identified (around 1 million), the number of registrations has fallen quickly in recent years. This drop in usage could reflect a decrease in the number of users on RG. However, official data⑥ actually indicate a continuous increase in users (19 million in 2020 and 22.5 million in 2021). Thus, a plausible explanation might be that users are tending to use the DOI functionality less owing to the existence of other platforms that also permit the generation of DOIs, including OSF,⑦ Zenodo,⑧ Orvium,⑨ and Figshare.⑩ In addition, institutional repositories have begun to generate DOIs for certain document types, such as theses and datasets.

While the results indicate that users took advantage of the creation of this functionality to deposit and disseminate gray academic publications, RG-DOI publications are concentrated in the years when the DOI registration functionality was created, indicating a preference in RG for recent articles, an aspect previously noted by Thelwall and Kousha (2016). However, the lack of precision in the publication date data (see Section 4.3) means we need to exercise caution when dealing with the results in Table 1.

In the case of document typology, results show the relevance of publications associated with scientific events (e.g. posters, conference papers, and presentations), doctoral theses, reports (including working papers and technical reports), and preprints. However, the analysis of document types highlights a series of difficulties.

First, more than a quarter of the RG-DOIs (around 285,000 records) are not assigned a document type: that is, no information is included in the “ResourceType” metadata provided by DataCite. The absence of this field for so many records is unexpected, given that users must specify the document type (required field) at the time of deposit, and this category does appear on the publication page. It might be the case that users believe they can change the document type after registration. Yet, RG instructions explicitly state:

“Once a ResearchGate DOI has been generated for a research item, you can no longer edit that item. Instead, you should remove the research completely, re-upload it with the edits and generate a new DOI.”

However, the author was allowed to change a publication assigned as Poster with an RG-DOI to another document type, thus contradicting the official information provided by RG. This could account for the inconsistencies between the DataCite data (which do remain unchanged) and the publication page data, but it does not account for the number of Null fields.

Remarkably, 83.7% of all records without document-type metadata were accumulated between 2014 and 2016. The number of documents without the “ResourceType” metadata fell from 2017 onwards, although it remains significant to this day (4,963 records in 2022). This can probably be attributed to the platform’s internal control process. The author contacted RG to clarify this situation, but has yet to receive a response.

Second, the document typologies identified yielded additional contradictory results. The official information from RG indicates that RG-DOIs cannot be generated for Articles, Books, Chapters, Conference Papers, Cover Pages, Patents, and Posters since they are considered to have been published elsewhere and may already have a DOI. However, RG-DOIs assigned to Articles (143), Patents (157), Chapters (1,425), Books (13,231), and, most significantly, Conference Papers (118,238) and Posters (141,725) have been identified. To verify this situation, the author attempted to create RG-DOIs for each document type manually, finding it possible in the case of Posters but not for Articles, Books, Chapters, Conference Papers, Cover Pages, and Patents. It therefore seems plausible that the generation policy has been changed, with the exception of Posters, which would appear to be an error awaiting correction on RG’s website.

Third, RG’s classification, based on 18 document types, introduces various inconsistencies that jeopardize any quantitative analyses by document type. For example, the Methods category is inappropriate since it confuses document type (e.g. Journal Article, Book chapter, and Book) with genre (e.g. Method, Bibliographic Review, Book Review, Editorial, Letter to Editor, etc.). In fact, this error is also present in many bibliographic databases. Other categories are ambiguous: Presentation, for example, might correspond to conference keynotes, teaching material, supplementary material, or even a Poster.

Fourth, DataCite’s metadata includes document types not currently available for users when describing their publications. For example, since 2017, there have been no records labeled “Publication.” The case of “Working Paper” is more complex. This document type experienced a peak in records precisely in 2017 (with 10,797 documents) and then practically disappeared and is currently unavailable as a document type. However, DataCite assigned the “Working Paper” label to 20 records in 2022. The type “Other” (with 6,900 records in 2022) is also present in DataCite but is unavailable for users. In addition, the “Research” category is unavailable for users when describing their publications but appears as a document-type label in RG’s publication pages.

Fifth, the changes recorded in 2017 can be attributed to the fact that five publishers (American Chemical Society, Brill, Elsevier, Wiley, and Wolters Kluwer) formed the Coalition for Responsible Sharing⑪ following unsuccessful attempts to find ways for RG to run its service without breaching publishers’ copyright (Van Noorden, 2017). Consequently, in 2017, ResearchGate removed many copyrighted articles from public view. This circumstance could explain the data shown in Figure 2. It is no coincidence that the Preprints category has been growing since 2018, probably as a result of this legal issue.

In the case of the document language, the results reported here show the predominance of English, which is consistent with the global distribution of languages by scholarly output. While Scopus was used as a baseline for global language distribution in academic publications, which might differ from that of other bibliographic databases (Vera-Baceta et al., 2019), this comparison provides evidence of a greater than expected number of publications in Portuguese and, especially, in Spanish. These results may reflect the high use of RG in the countries where these languages are spoken and the activity of users in South America, who make up 8% of all RG members. German, the second language in global scholarly output according to Scopus and the language of RG’s country of tax residence, also stands out in this regard. By contrast, the absence of publications with RG-DOIs in languages with non-Latin alphabets (e.g. Russian, Japanese, Korean, and Chinese) is significant, and even more so when there are publications deposited in RG written and described in these languages. It is unlikely that every author from these countries has submitted publications to RG without requesting an RG-DOI; thus, the error could be in the detection system. Nevertheless, Thelwall and Kousha (2014) detected the infrequent use of RG by scholars from China, South Korea, and Russia, a factor that may well be related. The distribution of RG users at the country level should help shed light on this issue. Unfortunately, RG only provides statistics of RG members by continent.

The number of publications with RG-DOIs that do not specify the language in DataCite (354,973) is also remarkable. This is unexpected given that, while RG does not request the language during the deposit process, it should detect the language automatically. For this reason, it is unclear why this information does not appear in DataCite’s metadata.

The high percentage of discrepancies between the actual document type, the document type indicated in DataCite, and the document type displayed on the RG publication page suggests that the metadata provided by DataCite for RG-DOIs are not entirely reliable. These discrepancies are partially associated with human errors when depositing documents (e.g. year of publication and document typology), which are not verified. For example, RG-DOI “10.13140/RG.2.1.3702.8566” is displayed as “Research” on the publication page. However, the full text actually includes a journal article postprint, with its own DOI (“10.1108/IJEM-10-2014-0140”).

The need to establish moderation mechanisms has been discussed in relation to thematic repositories, especially due to the quality of the deposited works (Silagadze, 2023). Indeed, platforms such as OSF have started piloting post-moderation policies as of November 2023 to stop bot-generated, plagiarized, and self-plagiarized content from being submitted to the platform.⑫ However, giving users full freedom to describe the publications they deposit means the metadata generated cannot be used to carry out scientific studies owing to problems of consistency.

In some cases, users who deposit publications fail to include the year of publication in the document itself. For example, 42 presentations (51.2%) and 67 posters (70.5%) in the random sample do not include any publication date, but rather the authors assign the date of deposit as the date of publication in the metadata. In other cases, the inconsistency is associated with the document type. For example, authors assign a publication to the category Article but, in fact, deposit a preprint (for which there is insufficient information to determine whether it corresponds to an Article, Chapter, Conference Paper, or Report) or, vice versa, they assign the publication to the preprint category but actually deposit an Article, giving rise to copyright infringements in RG, an occurrence reported by Jamali (2017). Recent cooperation agreements and partnerships entered into between RG and different publishers (e.g. MDPI, Taylor and Francis, Emerald, American Association for the Advancement of Science, Springer, and Wiley) could help improve the quality of metadata in formal publications. However, since RG-DOIs are assigned to material that has not been formally published, it seems unlikely that DataCite will be able to provide useful data for conducting quantitative studies.

In the case of scientific impact, the percentage of publications with RG-DOIs cited is low (1.92%). However, this result is not unexpected considering the informal nature of many of the publications analyzed. Moreover, typographical errors introduced when entering the RG-DOI in the references, and parsing errors in WoS’s Cited References system, may well have further reduced the number of RG-DOIs cited, as occurs with all DOIs indexed in bibliographic references (Franceschini et al., 2015; Xu et al., 2019; Zhu et al., 2019).

Interestingly, 1,290 RG-DOIs extracted from WoS’s Cited References are not indexed by DataCite. Even though some of them are indeed no longer valid or have been removed, others do exist and continue to function. For example, DataCite indicates that the RG-DOI “10.13140/RG.2.2.29474.02242/1” does not exist;⑫ however, this identifier is correctly linked to a publication accessible on ResearchGate.⑬ These results confirm the existence of more RG-DOIs than those reported by DataCite, probably as a consequence of some technical error in the DOI resolver. Since DataCite is the official DOI registrar for RG, this prevents the systematic collection of all RG-DOIs created. However, it is estimated that the percentage of existing RG-DOIs not identified by DataCite is small and should not affect the general outcomes of the present study.
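The comparison between cited RG-DOIs and those indexed by DataCite can be sketched as a set difference. This is a hedged illustration only: the regular expression is an assumption inferred from the two RG-DOIs quoted in this section, the reference strings are invented, and the membership of the sample "indexed" set is illustrative rather than a statement about DataCite's actual holdings.

```python
import re

# Assumed RG-DOI shape, inferred only from the two identifiers quoted in the text.
RG_DOI_PATTERN = re.compile(
    r"10\.13140/RG\.\d+\.\d+\.\d+\.\d+(?:/\d+)?", re.IGNORECASE
)


def extract_rg_dois(reference):
    """Return the RG-DOIs found in one reference string, lower-cased."""
    # DOIs are case-insensitive, so lower-casing makes comparison reliable.
    return {m.group(0).lower() for m in RG_DOI_PATTERN.finditer(reference)}


def not_indexed(cited_references, indexed_dois):
    """Return cited RG-DOIs that are absent from the indexed collection."""
    indexed = {doi.lower() for doi in indexed_dois}
    cited = set()
    for ref in cited_references:
        cited |= extract_rg_dois(ref)
    return cited - indexed


# Invented reference strings wrapping the two RG-DOIs quoted in the text.
references = [
    "Author A. (2016). Some report. doi:10.13140/RG.2.1.3702.8566",
    "Author B. (2020). Some preprint. DOI 10.13140/rg.2.2.29474.02242/1.",
]
indexed_sample = ["10.13140/RG.2.1.3702.8566"]  # illustrative set only

print(not_indexed(references, indexed_sample))
```

A pattern this strict would miss RG-DOIs damaged by typographical errors, so a real pipeline would probably need a looser match followed by manual checking.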

If we assume that those authors who decide to deposit their publications in RG and register an RG-DOI have a certain interest in circulating their work on social networks, the percentage of RG-DOIs indexed in PlumX (0.9%) is smaller than expected. To determine whether this apparently low coverage was due to an error, two data collections were carried out (May and October, 2023). In the first data collection, 10,303 RG-DOIs were found, and in the second, 10,335 (of which 10,054 were included in the first data collection). Thus, the low coverage of RG-DOIs indexed in PlumX is confirmed.
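The relationship between the two data collections can be checked with simple set arithmetic. The sketch below uses only the counts reported in this study (10,303, 10,335, 10,054 shared, and 1,092,934 registered RG-DOIs); the function name and the output keys are hypothetical, chosen for illustration.

```python
def overlap_stats(n_first, n_second, n_shared, n_total):
    """Summarize the overlap between two collections of identifiers."""
    # Inclusion-exclusion: identifiers seen in at least one collection.
    union = n_first + n_second - n_shared
    return {
        "only_first": n_first - n_shared,
        "only_second": n_second - n_shared,
        "union": union,
        "coverage_first_pct": 100 * n_first / n_total,
        "coverage_second_pct": 100 * n_second / n_total,
    }


# Counts taken from the May and October 2023 collections reported above.
stats = overlap_stats(10_303, 10_335, 10_054, 1_092_934)
for key, value in stats.items():
    print(key, round(value, 2))
```

Under these counts, 249 RG-DOIs appear only in the first collection and 281 only in the second, so 10,584 distinct RG-DOIs were seen across both, and coverage stays below 1% in each collection.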

Finally, the low level of online dissemination of RG-DOIs on Twitter (now X) stands out. In PlumX’s May 2023 data collection, only 420 RG-DOIs embedded in tweets were identified. Twitter data are no longer reported by PlumX, which means more recent data are not available.⑭ It might be the case that most users requesting RG-DOIs limit the dissemination of their works to RG, and do not use other social media platforms; however, this hypothesis would need to be tested in future studies.

The findings demonstrate, on the one hand, the usefulness of analyzing DOIs generated by academic social networking platforms when studying the dissemination of gray academic literature, as the generated DOIs facilitate the indexing, sharing, and citation of this literature. However, on the other hand, errors found in the metadata (e.g. publication years, language, or document type) limit the accuracy of large-scale RG-DOI analyses. Both meta-researchers and practitioners should exercise extreme caution when drawing conclusions from the analysis of RG-DOI publications unless manual data cleansing has been applied. These errors, in turn, can spread between interoperable bibliographic systems, which should not ingest RG-DOI publication metadata directly. In the same way, ResearchGate users should not rely on the metadata of these publications, especially on the ResearchGate publication pages, as they may not match the actual records deposited, which could confuse them or limit their user experience on the platform.

This study has revealed a significant number of registered RG-DOIs (1,092,934), facilitating access to, and the citation of, academic gray literature. Notably, there is a prevalence of congress materials (presentations, posters, and conference papers), reports, books, and theses. However, a decline in this function of ResearchGate has been identified, possibly attributable to the emergence of other platforms. Indeed, future research might usefully examine the registration, dissemination, and citation of informal publications via alternative platforms, most obviously Zenodo, OSF, and Figshare.

A small percentage of publications with RG-DOIs are cited in WoS-indexed publications, underscoring their utility in scholarly discourse. The 41,492 citations identified are deemed relevant, considering that publications with RG-DOIs constitute document types that are infrequently cited. Future research should seek to characterize these citing publications to ascertain whether the citation of these materials is increasing, and to determine the relevance of the formal sources citing them, the languages of the citing documents, and the rate of self-citation.

The societal impact of publications with RG-DOIs lags behind their scientific impact, as evidenced by their low coverage in PlumX. Employing other aggregators (e.g. Altmetric.com) might yield different results; again, this is something future studies might usefully consider. Noteworthy is the interest these publications have garnered in Mendeley, where the number of Reads points to their use in bibliographies, especially among students. However, these works have only limited dissemination on Twitter, an aspect that needs to be further analyzed.

The metadata provided by DataCite are inadequate for conducting quantitative studies of RG-DOIs. This is attributable, on the one hand, to ResearchGate’s automated DOI registration system, and, on the other, to the intervention of potentially non-expert researchers in the provision of scientific information. The lack of verification, in the first instance, and of adequate content curation, in the second, results in inconsistencies in the metadata that impact the year of publication and document type. Additionally, discrepancies are evident in ResearchGate’s official documentation, which seems to permit, on occasions, the generation of DOIs for posters and changes to the document type following DOI registration, thus contradicting the site’s official directives. It is recommended, therefore, that the ResearchGate team updates its information.

Finally, the availability of an API would alleviate dependence on DataCite for gathering bibliographic information on publications with RG-DOIs. It would also provide access to usage and impact metrics (aspects not addressed in this study) that might shed more light on the use of these materials within the scientific community.