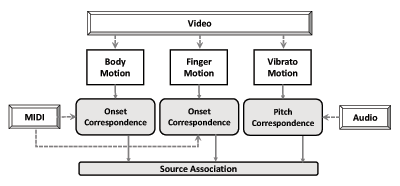

Figure 1

Outline of the proposed universal source association system for chamber ensemble performances. Three types of motion are modeled and correlated with the audio and score events in three modules.

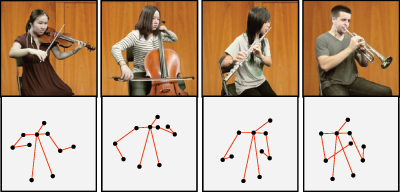

Figure 2

Body motion extraction. Upper-body skeletons (second row) are extracted with OpenPose (Cao et al., 2017) in each video frame (first row), and the resulting joint trajectories are then temporally smoothed.
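The caption does not say which smoothing filter is applied to the skeletons. As a minimal sketch, assuming a centered moving average over the joint trajectories, the step could look like the following. The function name `smooth_keypoints`, the `(frames, joints, 2)` array layout, and the window length are illustrative assumptions, not details taken from the paper or from OpenPose.

```python
import numpy as np

def smooth_keypoints(keypoints, window=5):
    """Smooth joint trajectories over time with a centered moving average.

    keypoints: array of shape (frames, joints, 2) holding x/y positions
               (hypothetical layout; adapt to the actual pose output).
    window:    odd number of frames to average over (assumed value).
    """
    kp = np.asarray(keypoints, dtype=float)
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    pad = window // 2
    # Repeat the first and last frame so the output keeps the same length.
    padded = np.pad(kp, ((pad, pad), (0, 0), (0, 0)), mode="edge")
    kernel = np.ones(window) / window
    # Filter each coordinate of each joint independently along the time axis.
    return np.apply_along_axis(
        lambda series: np.convolve(series, kernel, mode="valid"), 0, padded
    )
```

A longer window suppresses more frame-to-frame jitter but also blurs fast gestures such as bow changes, so the window length would need tuning against the video frame rate.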

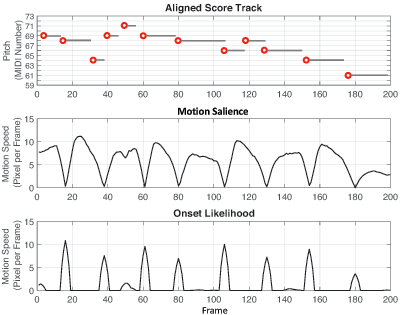

Figure 3

Example correspondence between body motion and note onsets. Top: temporally aligned score part with onsets marked by red circles. Middle: extracted motion salience (primarily bowing motion) from the visual performance of a violin player. Bottom: derived onset likelihood curve from the motion salience.

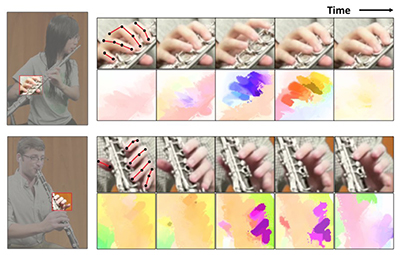

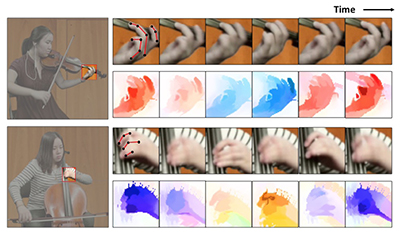

Figure 4

Optical flow visualization of finger motion in five consecutive frames corresponding to note changes. The color encoding scheme is adopted from Baker et al. (2011).

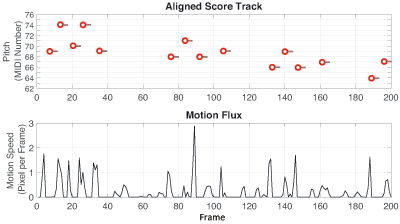

Figure 5

Example correspondence between finger motion and note onsets of a flute player. Top: temporally aligned score part with onsets marked by red circles. Bottom: extracted motion flux from finger movements.

Figure 6

Optical flow visualization of hand motion corresponding to vibrato articulation. The color encoding scheme is adopted from Baker et al. (2011).

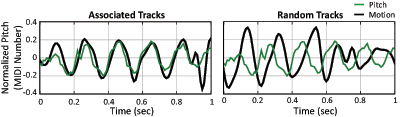

Figure 7

The same segment of normalized pitch contour f(t) (green) overlaid with the motion displacement curve d(t) (black) from the associated track (left) and another random track (right).
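The comparison in this figure can be illustrated with a simple correlation score between the two curves. The sketch below assumes that both curves are sampled at the same rate and that the match is measured by the absolute Pearson correlation; the function names `zscore` and `vibrato_match_score` are hypothetical, and the paper's actual matching measure may differ.

```python
import numpy as np

def zscore(x):
    """Normalize a curve to zero mean and unit variance (zeros if constant)."""
    x = np.asarray(x, dtype=float)
    std = x.std()
    return (x - x.mean()) / std if std > 0 else np.zeros_like(x)

def vibrato_match_score(pitch, displacement):
    """Absolute Pearson correlation between a pitch contour f(t) and a
    motion displacement curve d(t) of equal length.

    The absolute value is an assumption: the sign of d(t) depends on the
    arbitrary orientation of the axis along which the hand motion is measured.
    """
    f = zscore(pitch)
    d = zscore(displacement)
    return float(abs(np.mean(f * d)))

# Synthetic illustration: a 6 Hz vibrato sampled at 30 frames per second.
t = np.arange(60) / 30.0
rng = np.random.default_rng(0)
pitch = np.sin(2 * np.pi * 6 * t)
same_player = 0.8 * pitch + 0.3 * rng.normal(size=t.size)  # noisy, in phase
other_player = np.sin(2 * np.pi * 4.3 * t + 1.0)           # unrelated motion

score_same = vibrato_match_score(pitch, same_player)
score_other = vibrato_match_score(pitch, other_player)
```

Under these assumptions, `score_same` comes out much higher than `score_other`, mirroring the left and right panels of the figure.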

Table 1

The number of pieces for each instrument arrangement in the original and expanded URMP datasets.

| Dataset | Ensemble | String | Wind | Mixed | Total |
|---|---|---|---|---|---|
| Original | Duet | 2 | 6 | 3 | 11 |
| | Trio | 2 | 6 | 4 | 12 |
| | Quartet | 5 | 6 | 3 | 14 |
| | Quintet | 2 | 4 | 1 | 7 |
| Expanded | Duet | 57 | 91 | 23 | 171 |
| | Trio | 41 | 65 | 20 | 126 |
| | Quartet | 15 | 25 | 7 | 47 |
| | Quintet | 2 | 4 | 1 | 7 |

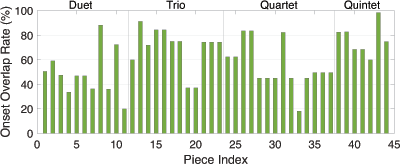

Figure 8

Onset overlap rate for each piece from the original URMP dataset.

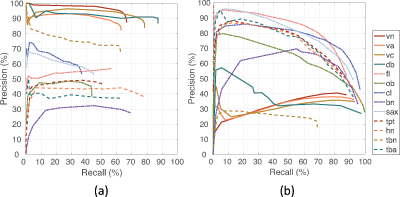

Figure 9

Onset detection evaluation results from: (a) body motion, and (b) finger motion, for different instruments.

Table 2

The number of evaluation samples for source association, by excerpt duration and instrumentation.

| Instrumentation | Ensemble | 5 s | 10 s | 15 s | 20 s | 25 s | 30 s |
|---|---|---|---|---|---|---|---|
| String | Duet | 1323 | 642 | 420 | 303 | 236 | 200 |
| | Trio | 1044 | 506 | 333 | 240 | 189 | 158 |
| | Quartet | 355 | 172 | 114 | 82 | 65 | 54 |
| | Quintet | 64 | 31 | 21 | 15 | 12 | 10 |
| Wind | Duet | 1809 | 887 | 557 | 435 | 323 | 266 |
| | Trio | 1275 | 626 | 391 | 309 | 229 | 187 |
| | Quartet | 474 | 232 | 145 | 115 | 86 | 68 |
| | Quintet | 66 | 32 | 20 | 16 | 12 | 9 |
| Mixed | Duet | 441 | 203 | 141 | 96 | 82 | 60 |
| | Trio | 380 | 174 | 121 | 82 | 70 | 51 |
| | Quartet | 199 | 92 | 64 | 44 | 37 | 28 |
| | Quintet | 22 | 10 | 7 | 5 | 4 | 3 |

Figure 10

(a)–(c): Source association accuracy using only the onset correspondence between score parts and body motion (the first component in Eq. (5)). (d)–(f): Source association accuracy using only the onset correspondence between score parts and finger motion (the second component in Eq. (5)).

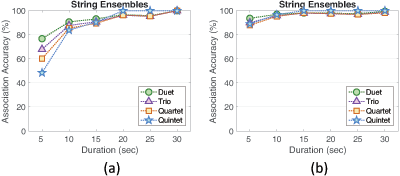

Figure 11

Source association accuracy of string ensembles by (a) using only the vibrato correspondence between pitch fluctuation and hand motion (the vibrato component in Eq. (5)), and (b) combining the vibrato correspondence with the onset correspondence from body motion (the vibrato component and the first component in Eq. (5)).

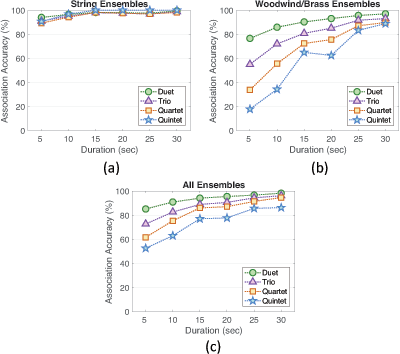

Figure 12

Source association accuracy of ensembles with different instrumentation using all three modules: onset correspondence from body motion, onset correspondence from finger motion, and vibrato correspondence from hand motion (Eq. (5)).