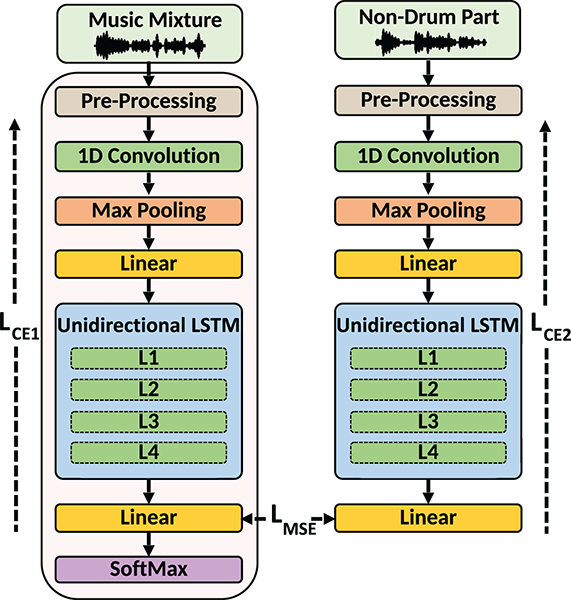

Figure 1

Neural structure of BeatNet+ for general music rhythm analysis. Both the main (left) and auxiliary (right) branches are initialized randomly and trained jointly, but only the main branch is utilized for inference.

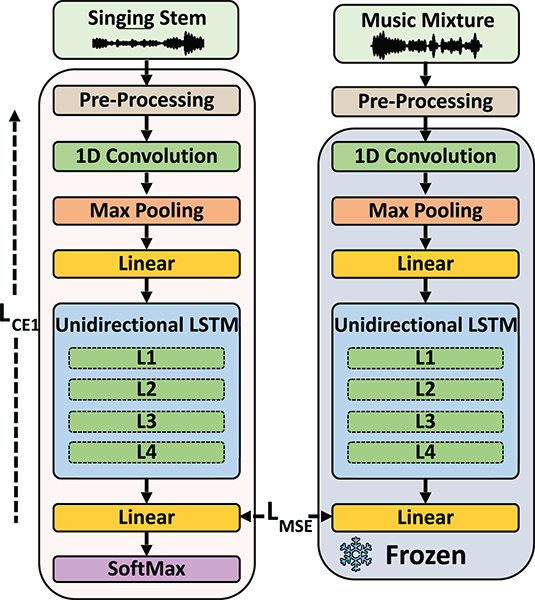

Figure 2

Neural structure of the auxiliary‑freezing (AF) adaptation approach for singing voice rhythm analysis. The main branch (left) is initialized randomly and trained for real‑time inference, while the auxiliary branch (right) is initialized with the pre‑trained BeatNet+ main branch weights and remains frozen during training.

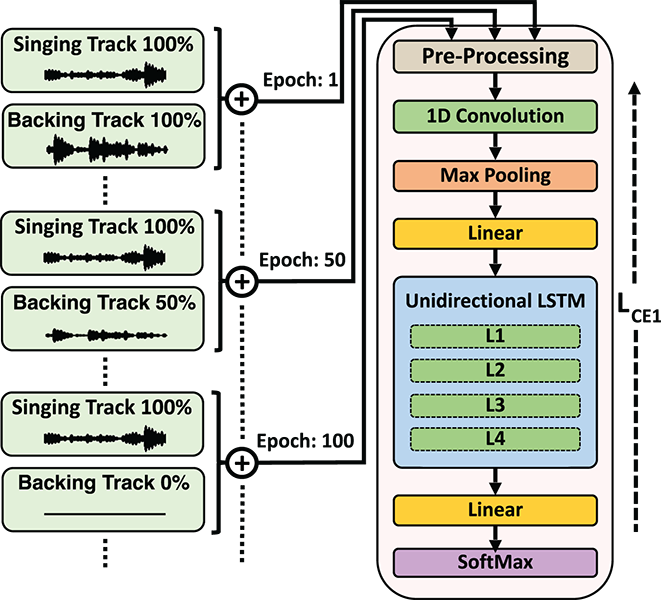

Figure 3

Illustration of the guided fine‑tuning (GF) approach for singing voice rhythm analysis. The model is initialized with the pre‑trained BeatNet+ main branch weights and fine‑tuned using music mixtures with backing music gradually removed over training epochs.
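The gradual removal of backing music can be pictured as a gain applied to the accompaniment that shrinks as training proceeds. The sketch below is only an illustration of that idea: the linear schedule, the per-sample mixing, and the names `backing_gain` and `make_training_mixture` are assumptions, since the caption does not specify how the fade is scheduled.

```python
from typing import List


def backing_gain(epoch: int, total_epochs: int) -> float:
    """Hypothetical linear schedule: gain 1.0 at the first epoch, 0.0 at the last."""
    if total_epochs < 2:
        return 0.0  # a single epoch leaves nothing to fade; train on vocals only
    frac = epoch / (total_epochs - 1)
    return max(0.0, 1.0 - frac)


def make_training_mixture(
    vocals: List[float], backing: List[float], epoch: int, total_epochs: int
) -> List[float]:
    """Mix vocals with an attenuated backing track for the given epoch (assumed scheme)."""
    gain = backing_gain(epoch, total_epochs)
    return [v + gain * b for v, b in zip(vocals, backing)]


if __name__ == "__main__":
    for epoch in range(5):
        print(epoch, backing_gain(epoch, 5))
```

Under this assumed schedule the model sees full mixtures at the start of fine‑tuning and isolated vocals at the end; any monotonically decreasing schedule would serve the same purpose.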

Table 1

Datasets used in our experiments.

| Dataset | Number of pieces | Number of vocals | Labels | Train | Validation | Test |
|---|---|---|---|---|---|---|
| Ballroom | 699 | 452 | Original | ✓ | ✓ | ✗ |
| GTZAN | 999 | 741 | Original | ✗ | ✗ | ✓ |
| Hainsworth | 220 | 154 | Original | ✓ | ✓ | ✗ |
| Rock Corpus | 200 | 315 | Original | ✓ | ✓ | ✗ |
| MUSDB18 | 150 | 263 | Added | ✓ | ✓ | ✗ |
| URSing | 65 | 106 | Added | ✓ | ✓ | ✗ |
| RWC jazz | 50 | 0 | Revised | ✓ | ✓ | ✗ |
| RWC pop | 100 | 188 | Revised | ✓ | ✓ | ✗ |
| RWC‑Royalty‑free | 15 | 29 | Revised | ✓ | ✓ | ✗ |

Table 2

Online rhythm analysis results for generic music on the GTZAN dataset, with offline state‑of‑the‑art models included for reference. F1 scores are given in percentages with a tolerance window of 70 ms, alongside latency and real‑time factor (RTF).

Metrics (performance on full mixtures)

| Method | Beat F1 (70 ms) | Downbeat F1 (70 ms) | Latency (ms) | RTF |
|---|---|---|---|---|
| Online models | | | | |
| BeatNet+ | 80.62 | 56.51 | 20 | 0.08 |
| BeatNet+ (Solo) | 78.43 | 49.74 | 20 | 0.08 |
| BeatNet (Heydari et al., 2021) | 75.44 | 46.69 | 20 | 0.06 |
| Novel 1D (Heydari et al., 2022) | 76.47 | 42.57 | 20 | 0.02 |
| IBT (Oliveira et al., 2010) | 68.99 | – | 23 | 0.16 |
| Böck FF (Böck et al., 2014) | 74.18 | – | 46 | 0.05 |
| BEAST (Chang and Su, 2024) | 80.04 | 52.23 | 46 | 0.40 |
| Offline models | | | | |
| Transformers (Zhao et al., 2022) | 88.5 | 71.4 | – | – |
| SpecTNT‑TCN (Hung et al., 2022) | 88.7 | 75.6 | – | – |
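For readers unfamiliar with the RTF column, the real‑time factor is conventionally the processing time divided by the duration of the audio processed, so values below 1 indicate faster‑than‑real‑time operation. The following is a minimal sketch of that ratio; the function names are hypothetical, and the exact measurement protocol used for the table is not stated here.

```python
import time
from typing import Callable


def real_time_factor(processing_s: float, audio_duration_s: float) -> float:
    """RTF = processing time / audio duration; below 1.0 means faster than real time."""
    if audio_duration_s <= 0:
        raise ValueError("audio duration must be positive")
    return processing_s / audio_duration_s


def measure_rtf(process: Callable[[], object], audio_duration_s: float) -> float:
    """Time a processing callable (an assumed stand-in for a tracker) and return its RTF."""
    start = time.perf_counter()
    process()
    elapsed = time.perf_counter() - start
    return real_time_factor(elapsed, audio_duration_s)


if __name__ == "__main__":
    # Illustrative numbers only: 2.4 s of compute on a 30 s clip gives an RTF of about 0.08.
    print(round(real_time_factor(2.4, 30.0), 2))
```

As an illustration, an RTF of 0.08 corresponds to roughly 2.4 seconds of computation for a 30‑second excerpt.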

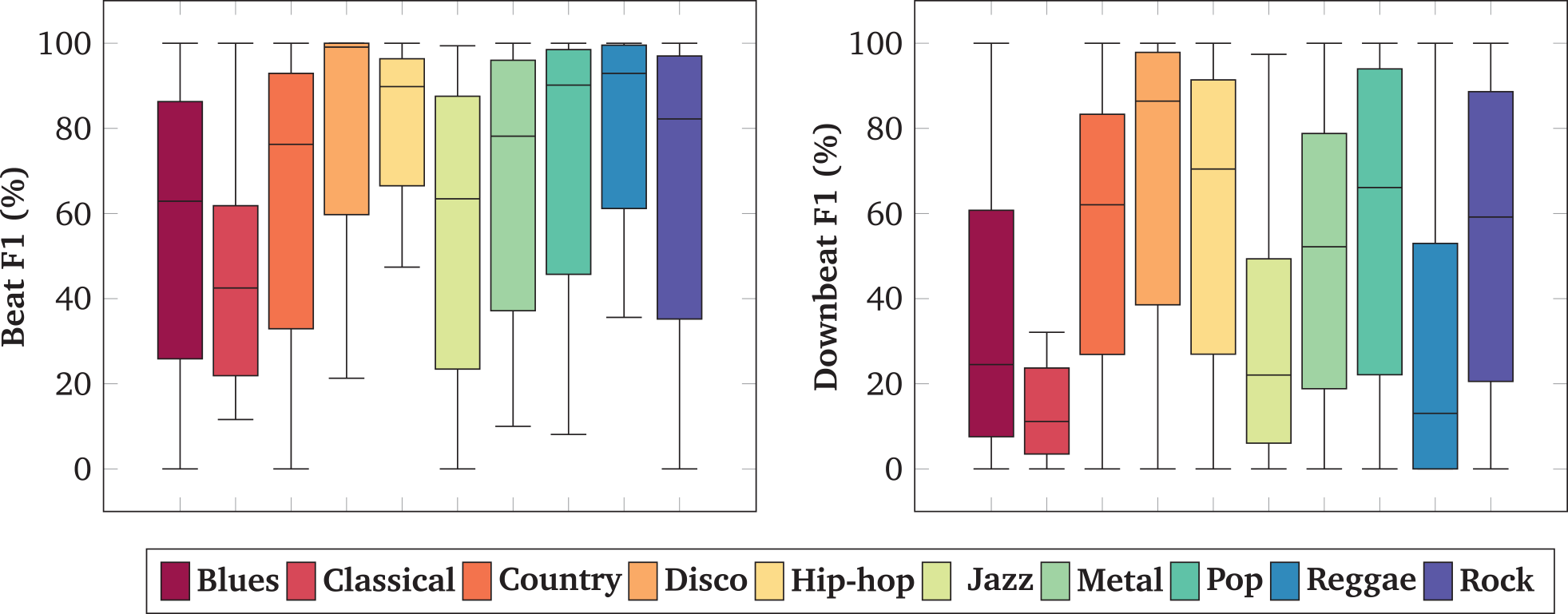

Figure 4

F1 scores for beat tracking and downbeat tracking of the BeatNet+ model across diverse genres within the GTZAN dataset.

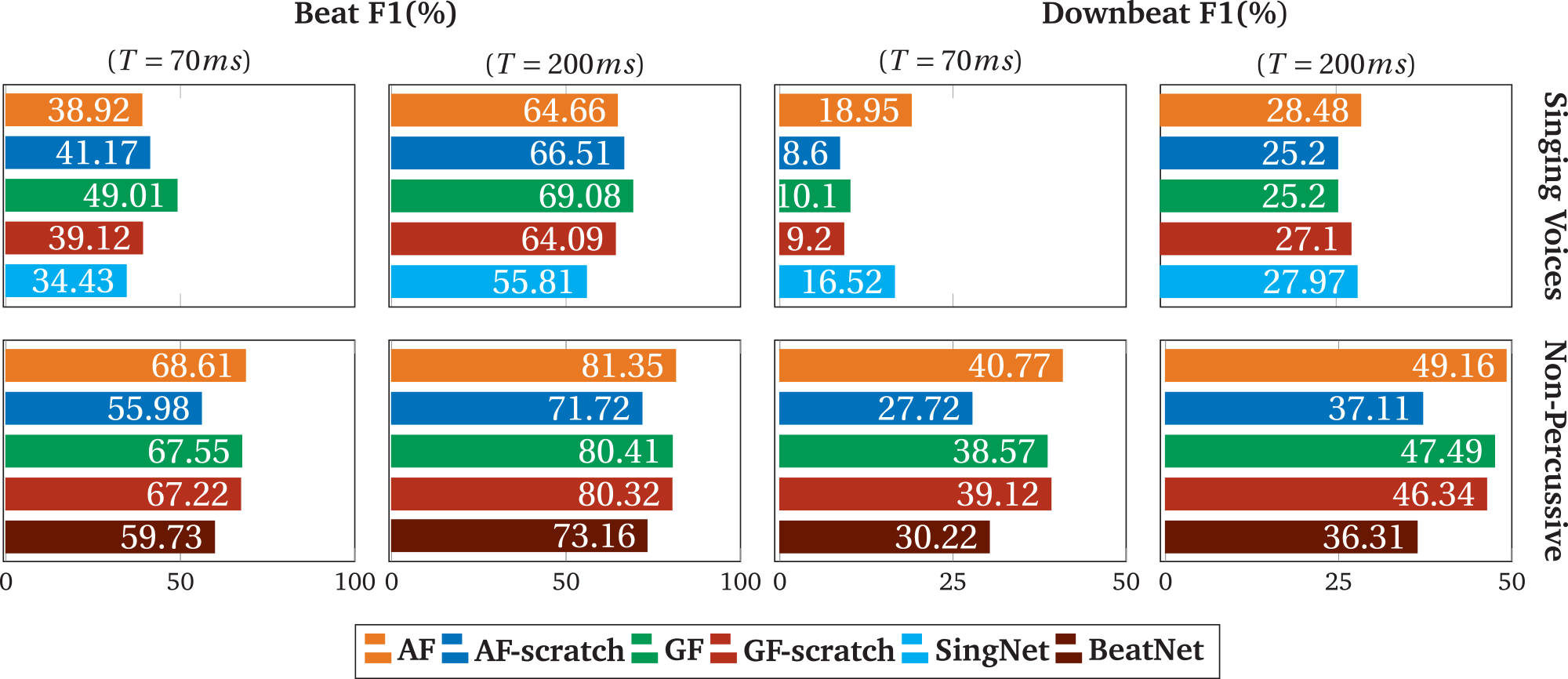

Figure 5

F1 scores of online rhythm analysis models on singing voices (top row) and non‑percussion music (bottom row) with two tolerance windows, 70 ms and 200 ms.
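To make the role of the tolerance window concrete, the sketch below computes a beat F1 score by counting an estimated beat as a hit when it falls within the tolerance of a not‑yet‑matched reference beat. It is a simplified illustration using a greedy one‑to‑one matching over sorted event times, not necessarily the evaluation code used for the figure; the function name `f_measure` and the example beat times are assumptions.

```python
from typing import Sequence


def f_measure(
    reference: Sequence[float], estimated: Sequence[float], tolerance: float = 0.07
) -> float:
    """F1 between reference and estimated event times (seconds), one-to-one matching."""
    ref = sorted(reference)
    est = sorted(estimated)
    i = j = hits = 0
    while i < len(ref) and j < len(est):
        diff = est[j] - ref[i]
        if abs(diff) <= tolerance:
            hits += 1  # estimate and reference are paired; neither is reused
            i += 1
            j += 1
        elif diff < 0:
            j += 1  # estimate is too early for this reference: false positive
        else:
            i += 1  # reference has no estimate close enough: miss
    if hits == 0:
        return 0.0
    precision = hits / len(est)
    recall = hits / len(ref)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    reference = [0.5, 1.0, 1.5, 2.0]      # made-up annotation times
    estimated = [0.52, 1.10, 1.49, 2.30]  # made-up tracker output
    print(f_measure(reference, estimated, tolerance=0.07))
    print(f_measure(reference, estimated, tolerance=0.2))
```

With these made‑up times, the estimate at 1.10 s misses the 70 ms window around 1.0 s but falls inside the 200 ms window, which is why the wider tolerance yields a higher score.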